Researchers have developed a computer that can identify these sketches better than a human

Sketch-a-Net's research team, Qian Yu, Yongxin Yang, Yi-Zhe Song, Tao Xiang, and Timothy Hospedales, weren't always sure that a machine could beat humans at image recognition. There is a limit to how good people are at drawing.

Recognising free-hand drawings at all is pretty tough. A black-and-white sketch of a person, for example, could be an abstract stick man or a highly detailed portrait. Sketches also lack some of the visual cues that photographs have, such as coloured pixels.

This image comes under the category "person walking."

Working out of the computer science lab at Queen Mary University of London, the team fed labelled examples from pre-defined categories of sketches into the artificial neurons of their network.

A pretty passable pineapple.

They used a data set from the Technical University of Berlin, which had used 20,000 hand-drawn images to test how well human beings could recognise sketches.

Hands are particularly difficult to draw. Here's the TU Berlin dataset in full.

The researchers reflected or stretched each of these images slightly, giving the software 31,000,000 sketches to work from. Hospedales says that artificial neural networks are getting better because so much data is available.
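To give a feel for how reflecting and stretching can multiply a dataset, here is a minimal sketch in plain Python. It is not the team's code: the function names, the tiny 4x4 "sketch" and the stretch factors are all assumptions made for illustration. Going from 20,000 drawings to 31,000,000 works out to about 1,550 variants per drawing.

```python
def reflect(image):
    """Mirror a sketch left-to-right."""
    return [row[::-1] for row in image]


def stretch_horizontally(image, factor):
    """Resample every row to a new width using nearest-neighbour lookup."""
    old_width = len(image[0])
    new_width = max(1, round(old_width * factor))
    return [
        [row[min(old_width - 1, int(x * old_width / new_width))]
         for x in range(new_width)]
        for row in image
    ]


def augment(image, factors=(0.75, 1.0, 1.25)):
    """Return the original and mirrored sketch at each stretch factor.

    The factors are illustrative assumptions, not values from the paper.
    """
    variants = []
    for base in (image, reflect(image)):
        for factor in factors:
            variants.append(stretch_horizontally(base, factor))
    return variants


# Hypothetical 4x4 binary sketch: 1 is ink, 0 is blank paper.
sketch = [
    [1, 0, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [1, 1, 1, 1],
]

variants = augment(sketch)
variants_per_sketch = 31_000_000 // 20_000

print(len(variants), "variants from one sketch in this toy example")
print(variants_per_sketch, "variants per sketch implied by the article's figures")
```

Every variant keeps the label of the drawing it came from, so the network sees many slightly different versions of the same pineapple or alarm clock.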

The candles are supposed to make this a cake, but it really could be any kind of dessert.

Mimicking the way the brain learns, artificial neural networks like Sketch-a-Net build on what they already know each time they perform a new task. This is how they are able to recognise images.

This is a pretty good drawing of a brain, but could always be mistaken for a cauliflower.

But to create a programme that can accurately recognise sketches, the team had to build in certain characteristics of drawings. Unlike photographs, whose pixels appear all at once, an online drawing is built up as a series of strokes.

Someone might choose to draw the outline of a car first, for example.

According to the team's paper, this is one of the ways humans are able to identify a drawing, but none of the existing approaches to sketch recognition had factored this in.

Definitely Spongebob Squarepants.

Each 2D layer of pixels holds the image of a stroke, and these are stacked on top of each other to make a 3D array of pixels. This shows the programme what order they were made in.

The programme recognised that the pointed nib on this object made it a pen.

Sketch-a-Net is able to "see" the sequence of strokes because they are represented in the same way as colour is stored by computers: as the third dimension in an array of pixels.
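The idea of stacking strokes the way a computer stacks colour channels can be sketched as follows. This is a simplified illustration rather than the team's implementation: it assumes one layer per stroke, as described above, and the helper names and the 3x3 canvas are hypothetical.

```python
def stack_strokes(strokes, height, width):
    """Build a height x width x len(strokes) nested list.

    Layer k holds a 1 wherever stroke k touched the canvas, so the layer
    index records drawing order, much as layers 0 to 2 of a colour image
    record red, green and blue.
    """
    volume = [[[0] * len(strokes) for _ in range(width)] for _ in range(height)]
    for order, stroke in enumerate(strokes):
        for row, col in stroke:
            volume[row][col][order] = 1
    return volume


def first_stroke_at(volume, row, col):
    """Index of the earliest stroke covering a pixel, or None if it is blank."""
    layers = volume[row][col]
    return layers.index(1) if 1 in layers else None


# Hypothetical drawing: a vertical line first, then a horizontal line.
strokes = [
    [(0, 1), (1, 1), (2, 1)],  # stroke 0, drawn first
    [(1, 0), (1, 1), (1, 2)],  # stroke 1, drawn second
]

volume = stack_strokes(strokes, height=3, width=3)

print(volume[1][1])                    # centre pixel, touched by both strokes
print(first_stroke_at(volume, 1, 0))   # pixel touched only by the second stroke
```

Flattening the layers into a single black-and-white image would throw away which line came first. Keeping them separate lets a network treat drawing order as one more feature of the input.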

Whale? Stingray? Shark? Bird? It's not hard to guess this is supposed to be a plane.

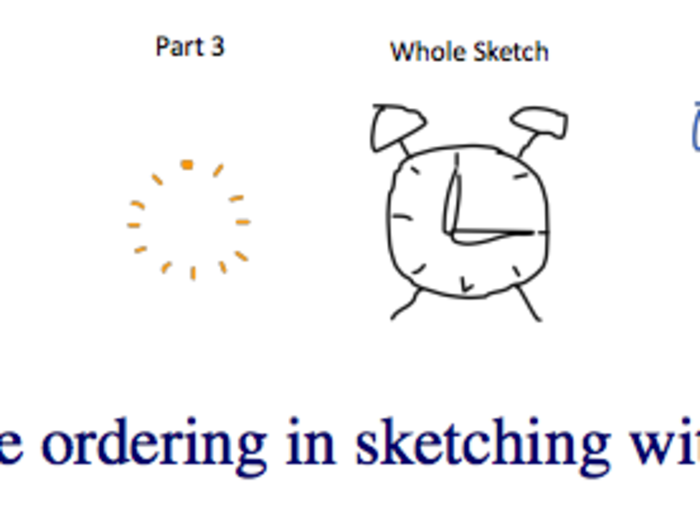

Here is one example of how the software uses the order in which strokes are drawn to identify a free-hand drawing of an alarm clock.

A lot of these sketches seem easily identifiable to humans, but that's because we picked out the less challenging ones. The programme also had to identify badly-drawn sketches (like the one below), which could have been harder for humans to recognise.

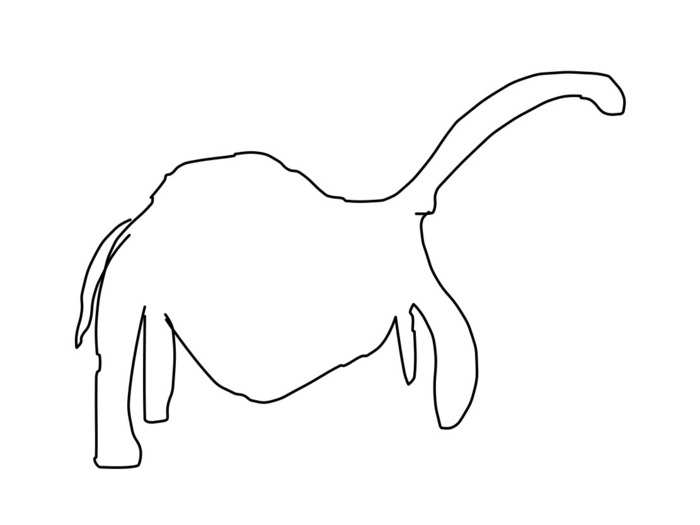

This, however, is supposed to be a giraffe.

The programme outperformed humans in certain categories. For "seagull," "flying-bird," "standing-bird," and "pigeon," all of which belong to the coarse semantic category of "bird," Sketch-a-Net had an average accuracy of 42.5% while humans only achieved 24.8%.

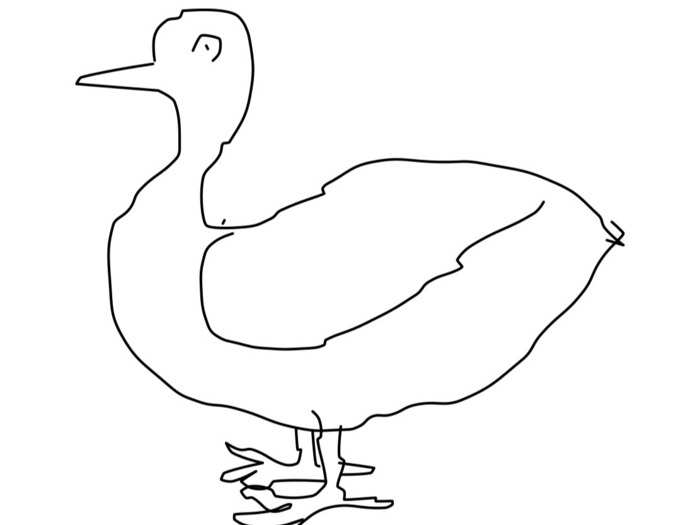

A lot of the birds in TU Berlin's dataset look pretty similar. This is supposed to be a pigeon, but looks more like a duck.

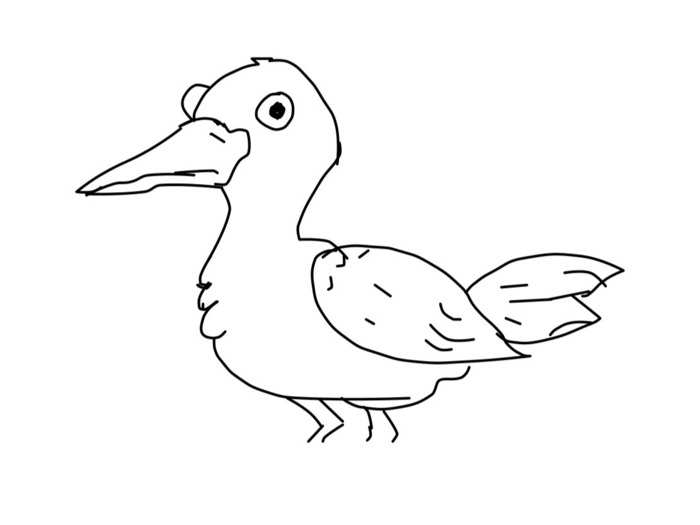

In particular, "seagull" was the worst-performing category for humans, with an accuracy of just 2.5%. According to the team's paper, it was often confused with other types of birds. In contrast, Sketch-a-Net scored 23.9% for "seagull," which is nearly 10 times better.
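The comparisons quoted in this article can be checked with a few lines of arithmetic. The snippet below uses only the percentages reported here, and the variable names are just for illustration.

```python
# Accuracy figures as reported in the article, in percent.
accuracy = {
    "bird (four categories, average)": {"sketch_a_net": 42.5, "human": 24.8},
    "seagull": {"sketch_a_net": 23.9, "human": 2.5},
}

# How many times more accurate the network was than people in each case.
ratios = {
    category: scores["sketch_a_net"] / scores["human"]
    for category, scores in accuracy.items()
}

for category, ratio in ratios.items():
    print(f"{category}: {ratio:.2f} times the human accuracy")
```

On these figures the network is about 9.6 times as accurate as people on "seagull" and about 1.7 times as accurate across the four bird categories.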

This is supposed to be a seagull, but also looks more like a duck.

Hospedales said that while this study in particular doesn't have any commercial applications, it has informed some of the other work the team is doing. They believe the technology could be used to create better sketch-based facial recognition programmes for police.

This person is talented.

This sort of tech could also be used to make online shopping a whole lot easier. Rather than relying on keywords when looking for a certain type of shoe or sofa, Hospedales sees people sketching out an image of the product they want.

You could search for this shoe by drawing it on your tablet.
