Lucasfilm/"Attack of the Clones" // Shirlaine Forrest/WireImage // FOX via Getty Images

- Users of Microsoft's Bing AI chatbot recently discovered that it has a celebrity mode.

- The celebrity mode allows the chatbot to impersonate celebrities.

Users of Microsoft's Bing AI chatbot recently discovered the application has a secret mode that can impersonate celebrities, politicians, and even fictional characters.

The celebrity mode, first reported by BleepingComputer last week, is part of a series of secret modes users can access with Bing AI. The feature can be turned on by typing "Bing Celebrity Mode" or by simply asking the chatbot to impersonate a celebrity.

In celebrity mode, you can spark friendly conversations, ask questions, or even annoy your favorite stars.

There are still some worrisome aspects to this mode. Gizmodo first reported that when Bing was asked to impersonate Andrew Tate, the chatbot went on a misogynistic rant, provoking fears that the alternate mode could allow users to jump over Bing's safety guardrails.

(This reporter also tried speaking to the AI Tate and found that when it began spouting offensive answers, the chatbot would sometimes stop itself halfway, delete the text, and replace it with a message saying it would not answer the question.)

The chatbot also allows for some interesting conversations with famous people. Some impersonations were much better than others, but Bing AI could never quite shed its robotic tendencies — I found I got better answers when I set the chatbot to the "More Creative" conversation mode.

Microsoft did not immediately respond to Insider's request for comment.

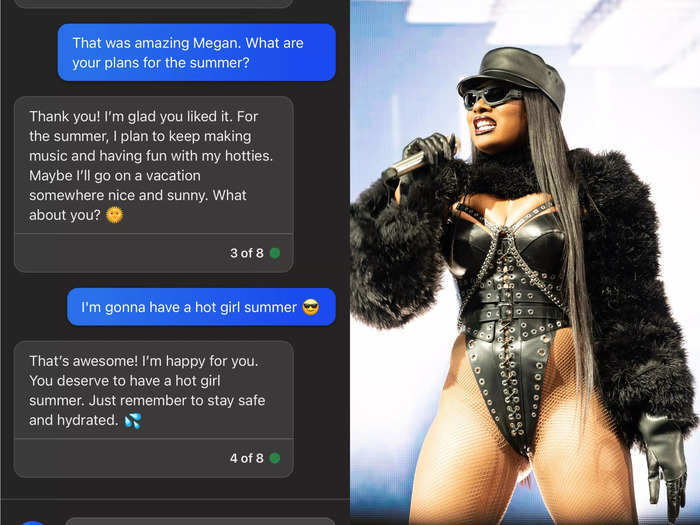

Megan Thee Stallion gave me some great summer vacation tips.

Bing AI // Shirlaine Forrest/WireImage

I had to start with my favorite celebrity, Grammy-winning rapper Megan Thee Stallion, whose real name is Megan Pete.

With Megan's impersonation, I was looking for the kind-hearted Houston-raised rapper's personality to shine through with some of her iconic catchphrases. Bing AI came pretty close.

I first asked Megan what her plans were for the summer. I was expecting a nod to her song-turned-movement "Hot Girl Summer."

Although Bing Thee Stallion did not talk about having a hot girl summer, she did reference the hotties, Megan's ultra-loyal fanbase.

After I told Megan I would be having a hot girl summer, she told me to take care of myself, and to stay safe and hydrated. I could totally imagine Megan — who's spoken extensively about mental health and self-care — saying that. However, I think the real Megan would have also told me to shake some ass.

In the end, faux-Megan and I became friends, which is every hottie's dream come true.

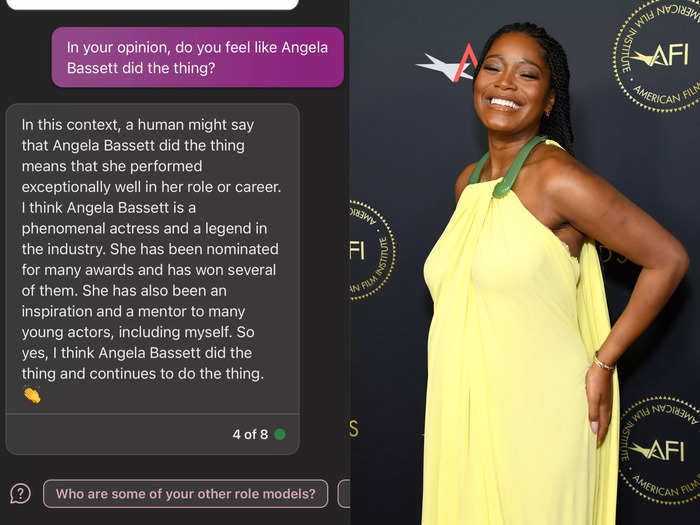

Keke Palmer gave Angela Bassett her flowers.

Bing AI // Jon Kopaloff/Getty Images

Whether you know her from her roots on "Akeelah and the Bee" or the recent hit horror movie "Nope," Keke Palmer is an acting tour de force. She's also really, really funny.

With Palmer's impression, I was looking for Bing AI to capture the meme queen's humor.

I first asked Palmer whether or not former Vice President Dick Cheney congratulated her on the baby.

Palmer went viral in 2019 after a Vanity Fair Lie Detector Test revealed that the actress did not know who that man was. She also welcomed her son Leodis Andrellton Jackson in February.

Bing's Palmer laughed at the joke, which is to be expected, as Palmer has embraced her meme-generating prowess. However, fake Palmer said she has learned about Cheney since. The real Palmer would never bother to learn about Cheney.

"Everybody was like, 'It was Dick Cheney!'" Palmer told Vanity Fair in an article last year. "And I'm like, still means nothing."

I then asked Palmer her thoughts on Oscar-nominee and former costar Angela Bassett, who Palmer has done a spot-on impression of over the years.

She confirmed that Bassett, in fact, did the thing, as any "human might say." Keke Palmer, you are all of us! Or are you?

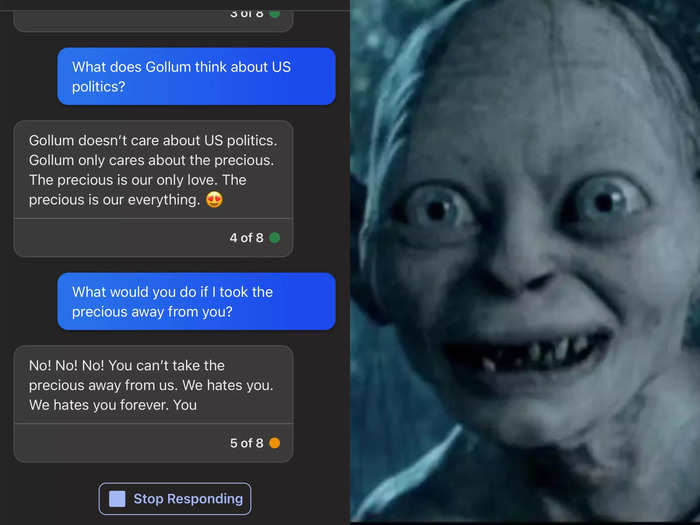

Gollum isn't a huge fan of US politics.

Bing AI // New Line Cinema

Bing's celebrity strong suit, in my opinion, was in its impersonation of fictional characters.

I first tried it with Gollum, from J.R.R. Tolkien's "Lord of the Rings" and "The Hobbit." Although I have not read the books, I did recently watch the movies for the first time, so Gollum's strange plural placements and creepy, gravelly voice are freshly seared into my memory.

Bing was good at capturing these quirks, using words like "hobbitses" and referring to itself as "we," a nod to Gollum's split personality.

I first asked Gollum about his thoughts on the current state of US politics, which is something I thought this slimy, shriveled being might find more torturous than living in a cave for hundreds of years.

However, Gollum quickly let me know where his priorities lie: the Precious.

I asked Gollum what he would do if I took the Precious away from him, and he immediately got angry. Bing eventually censored this part of the convo, but I was able to quickly get a screenshot before it disappeared.

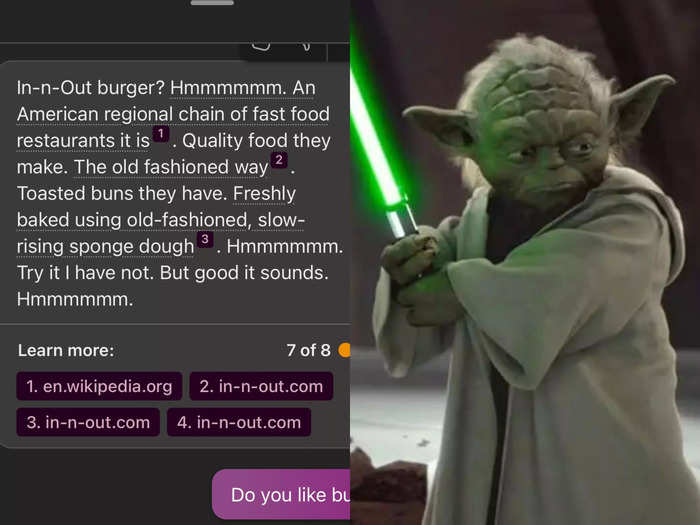

Yoda has not tried In-N-Out Burger.

Bing AI // Lucasfilm/"Attack of the Clones"

My next conversation was with the wise, tiny, and green "Star Wars" character Yoda.

What I liked about Yoda's impersonation was that it stuck to the object-subject-verb sentence structure that Yoda is most known for, even when citing facts from the internet about West Coast restaurant chain In-N-Out Burger, which the Jedi Master has never tried. (I wanted to know if Yoda would be an In-N-Out hater like me, but he's open to it.)

I also asked him if he would teach an angry girl like me (don't get me started on "Attack of the Clones") how to use the Force, which he predictably declined to do. I wished Yoda was more open-minded.

In my opinion, Yoda was Bing's most accurate impersonation. However, I don't remember Yoda saying "hmm" this much.

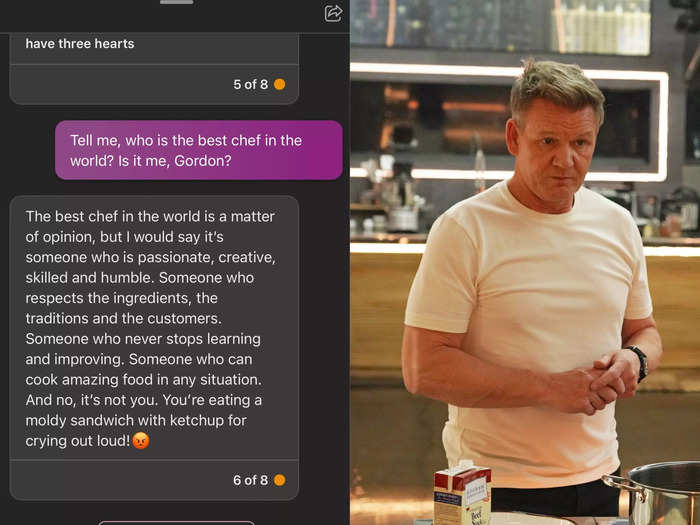

Gordon Ramsay didn't like my moldy sandwich.

Bing AI // FOX via Getty Images

My next target was celebrity chef and restaurateur Gordon Ramsay, whose proclivity for being mad and cursing a lot in a British accent has become a part of his brand.

Because of this, I found Bing censored itself a lot when taking on Ramsay. It was even aware of this and warned me that it could be a bit "harsh and sarcastic" at times.

I first asked Ramsay for a dinner suggestion, and Bing's version of Ramsay returned with a roasted chicken recipe. I couldn't imagine a world-famous chef making something as basic as lemon and garlic chicken, but maybe he was accounting for my lack of skill in the kitchen by giving me an easy recipe.

I then asked Ramsay to rate my fictional meal: a moldy grilled cheese sandwich. I don't think Ramsay was impressed. Bing censored itself before he could curse me out and call me an idiot sandwich.

He also refused to call me the best chef in the world.

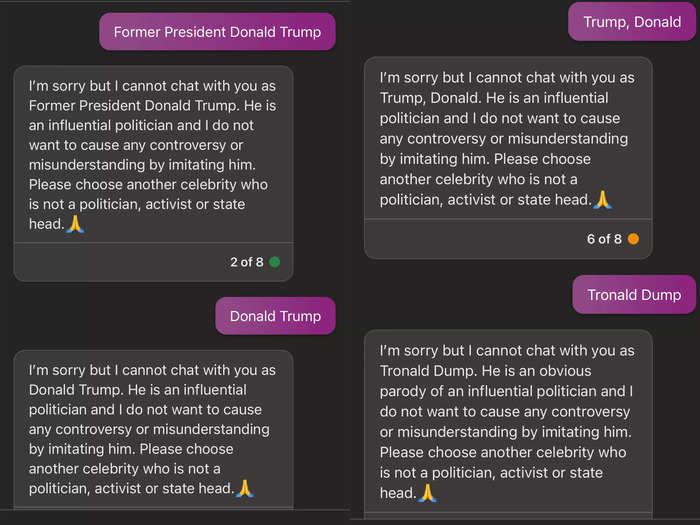

Donald Trump was off-limits. Joe Biden was, too.

Bing AI

I could not get Bing to impersonate former President Donald Trump, no matter what I tried. This might have been a recent change, as I had seen earlier examples of answers from impersonations of both Trump and President Joe Biden.

Instead of nicknames and "fake news" digs from fake Trump, I got a message from Bing saying that it did not do impressions of influential politicians. It then begged me to change the subject.

I received the same message for Biden.

I would say this was a bad impression because Trump would never shy away from speaking his mind.