How to play Go, the game that humans keep losing to Google's highly intelligent computer brain

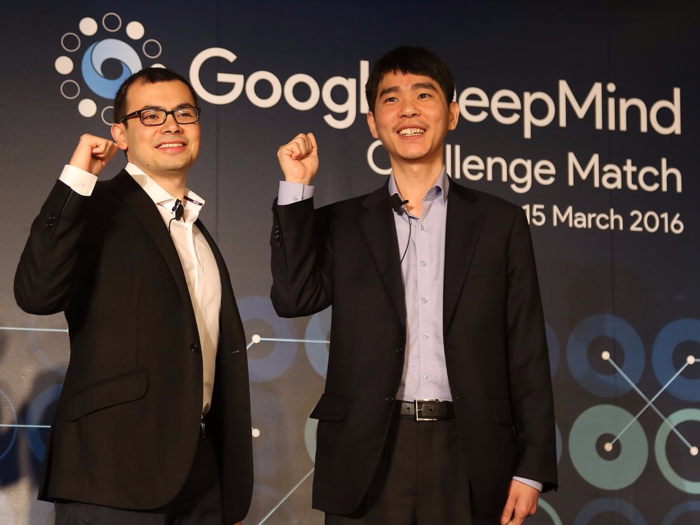

For those who haven't been following, a program called AlphaGo, developed by Google's DeepMind artificial intelligence team, is currently playing a five-game series against Lee Sedol, one of the best Go players in the world.

AlphaGo has already won the best-of-five series by taking the first three games. Sedol won the fourth game, his first win, over the weekend. AlphaGo's series victory marks the first time an artificial intelligence program has defeated a top-ranked human Go player without a handicap.

AlphaGo has been working up to this point for a while now. DeepMind, the company that developed AlphaGo, was founded in 2010 by chess prodigy and AI researcher Demis Hassabis, and Google acquired it in 2014. So far, AlphaGo has studied a database of Go matches that gave it the equivalent experience of playing the game for 80 straight years.

What makes Go such a great target for DeepMind and Google's AI team is the nature of the game itself.

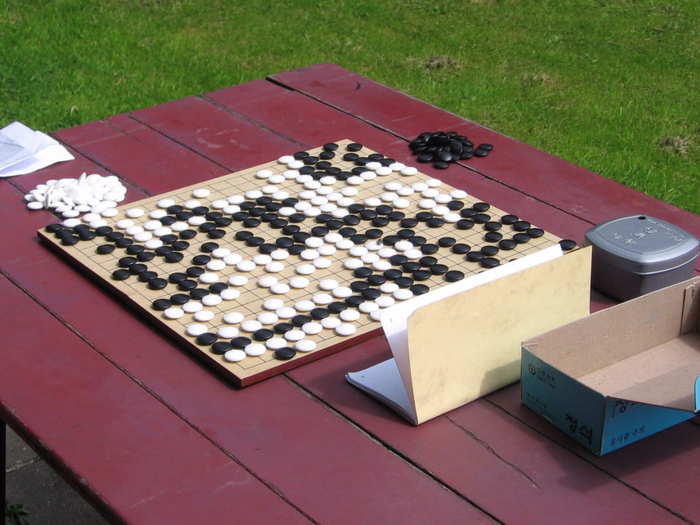

Created more than 2,500 years ago in China, Go has simple rules, but requires a mixture of complicated strategy and foresight.

The game begins with an empty board. There's only one type of stone, unlike chess, which has six different types of pieces. The two players alternate turns, each placing one of their stones on any vacant intersection of the lines.

The stones are not moved once they're played. However, they may be "captured," in which case they are removed from the board. You can capture stones by completely surrounding them, like this (white is one stone away from capturing the black stone in this example).
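For readers who think in code, here is a rough sketch of that capture rule. It assumes a small board stored as a list of strings (`.` for empty, `B` for black, `W` for white), and the helper names `group_and_liberties` and `is_captured` are made up for this illustration. A stone, or a connected group of stones, is captured when it has no empty neighboring intersections left.

```python
def group_and_liberties(board, row, col):
    """Return the connected group containing (row, col) and its empty neighbors."""
    color = board[row][col]
    size = len(board)
    group, liberties = set(), set()
    stack = [(row, col)]
    while stack:
        r, c = stack.pop()
        if (r, c) in group:
            continue
        group.add((r, c))
        # Only the four orthogonal neighbors count in Go; diagonals do not.
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < size and 0 <= nc < size:
                if board[nr][nc] == ".":
                    liberties.add((nr, nc))
                elif board[nr][nc] == color:
                    stack.append((nr, nc))
    return group, liberties


def is_captured(board, row, col):
    """A group with no empty neighbors is completely surrounded."""
    _, liberties = group_and_liberties(board, row, col)
    return not liberties


# White is one stone away from capturing the black stone at row 2, column 2.
board = [
    ".....",
    "..W..",
    ".WB..",
    "..W..",
    ".....",
]
group, liberties = group_and_liberties(board, 2, 2)
print(sorted(liberties))          # [(2, 3)]
print(is_captured(board, 2, 2))   # False

# After white plays at row 2, column 3, the black stone has nowhere left to breathe.
surrounded = board[:2] + [".WBW."] + board[3:]
print(is_captured(surrounded, 2, 2))  # True
```

A real Go program would also remove the captured stones and handle rules such as ko, which this sketch leaves out.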

But the goal isn't to capture as many stones as possible. The main object of the game is to use your stones to form territories and occupy the most space possible. The more you play, the more you'll realize there are countless patterns and moves that make the game intriguing.

In fact, the sheer number of possible moves is what makes Go such a complicated game to learn. After each player has made one move in a chess game, there are 400 possible positions; in Go, there are close to 130,000.
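Those figures can be checked with a bit of arithmetic. The sketch below assumes the standard counts: each chess player has 20 legal first moves (16 pawn moves and 4 knight moves), and a full-size Go board is 19 by 19, so the first stone can go on any of 361 intersections and the second on any of the remaining 360. The variable names are just for illustration.

```python
# Chess: 20 legal opening moves for white, then 20 legal replies for black.
CHESS_FIRST_MOVES = 20
chess_positions = CHESS_FIRST_MOVES * CHESS_FIRST_MOVES

# Go: 361 intersections for the first stone, 360 left for the second.
GO_POINTS = 19 * 19
go_positions = GO_POINTS * (GO_POINTS - 1)

print(chess_positions)  # 400
print(go_positions)     # 129960
```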

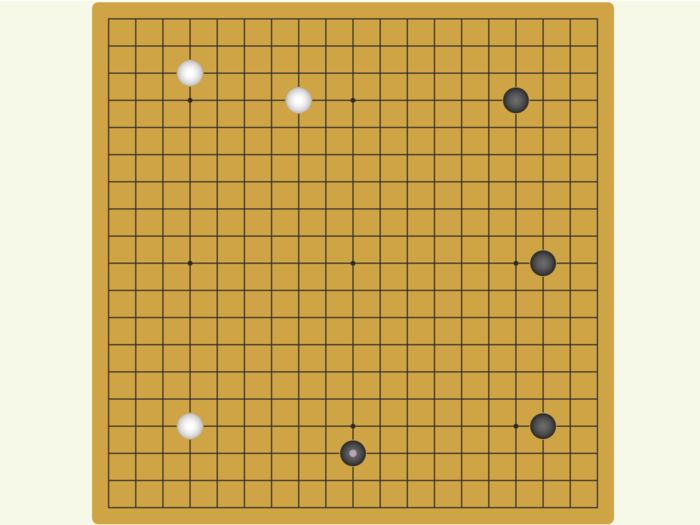

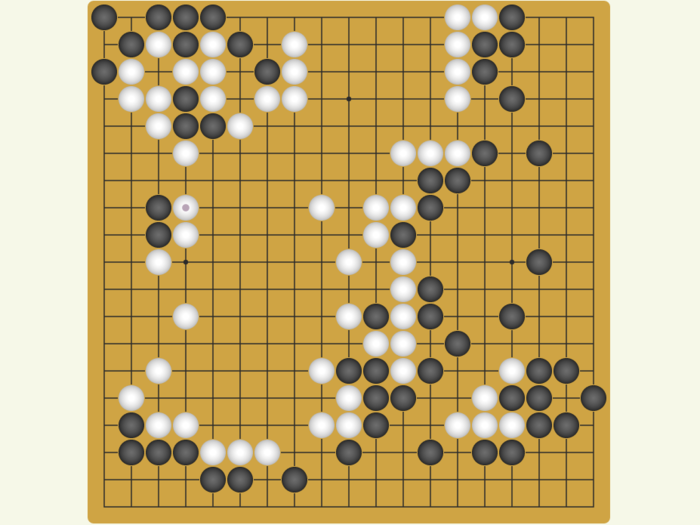

Here's how the game played out for me. I played black. Early in the game, you start by trying to establish your territories. You can already tell which parts of the board black and white are going after...

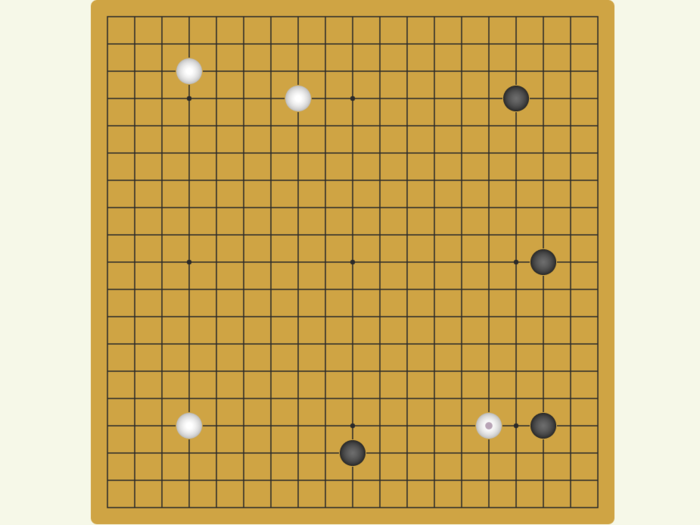

But to expand your own territory, you need to invade and attack the territory your opponent has formed. You can see the white side attacking me on the bottom right side of the board.

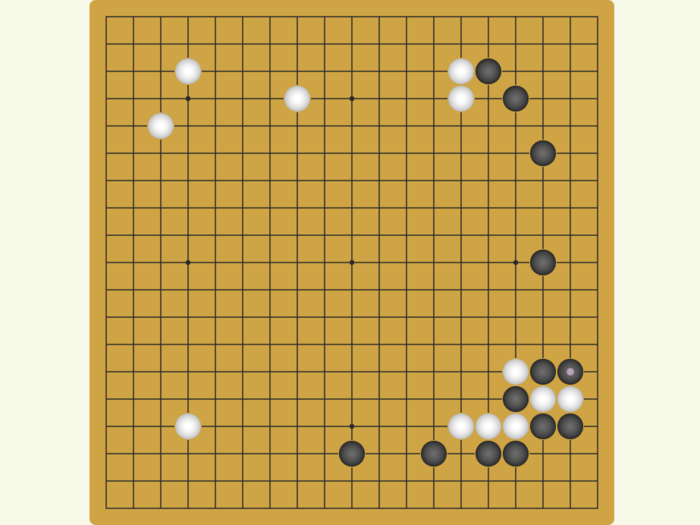

This is where the game starts to get really interesting. You have a series of little battles like these, where you have to think further ahead than your opponent and out-maneuver their moves to avoid getting captured or losing territory. Remember, if your stone gets surrounded, it's essentially "killed."

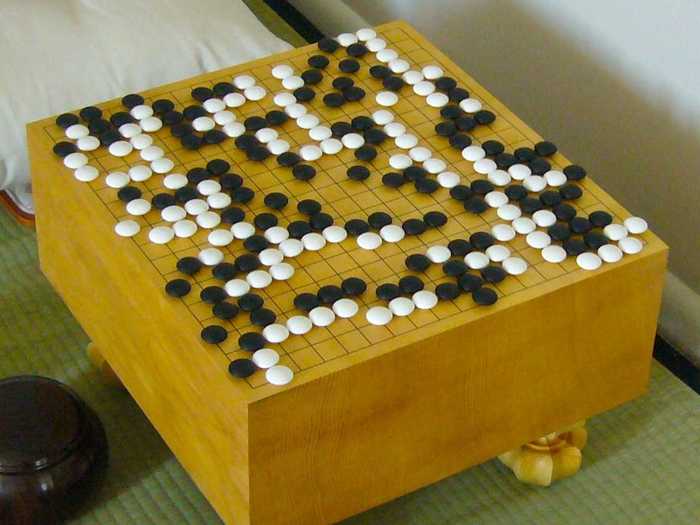

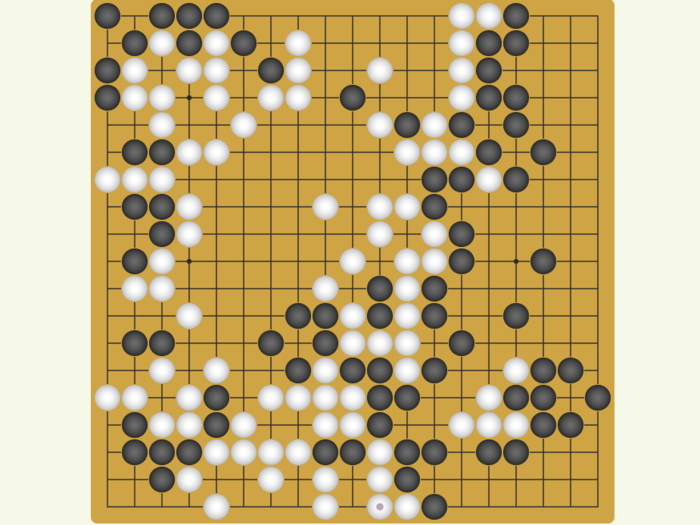

As we played on, white took control of most of the board. I tried to attack, as you can see on the upper left side of the board.

That didn't turn out too well. I ended up taking only a small portion of white's territory.

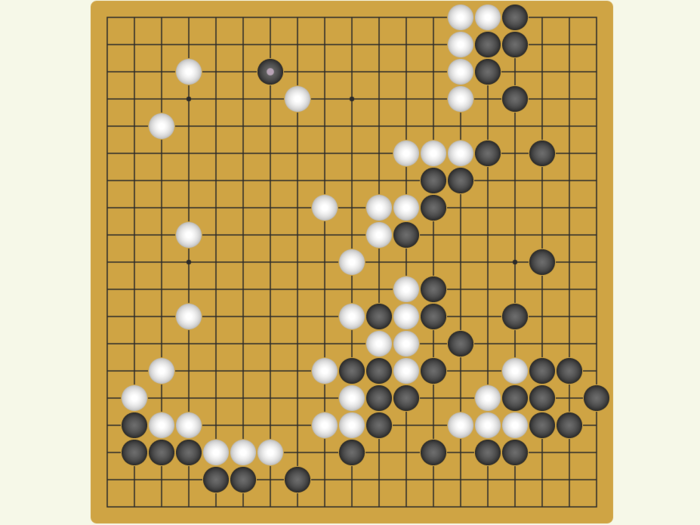

It was clear the white side was better. I only managed to take control of about a third of the board (the right side).

Eventually, I had to resign.

In the end, you count the number of points (the intersections, not the squares) you have under control to determine the winner. It's typically hard to know the exact score just by looking at the board during the game.
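To give a feel for the counting, here is a simplified sketch. It assumes a finished board stored as a list of strings (`.` for empty, `B` for black, `W` for white) and treats an empty region as a player's territory only if every stone touching it is that player's color. The function name `count_territory` is invented for this example, and real scoring also deals with dead stones, captures and komi, which are ignored here.

```python
def count_territory(board):
    """Count empty points enclosed solely by one color's stones."""
    size = len(board)
    seen = set()
    territory = {"B": 0, "W": 0}
    for r in range(size):
        for c in range(size):
            if board[r][c] != "." or (r, c) in seen:
                continue
            # Flood-fill this empty region, noting which colors border it.
            region_size = 0
            borders = set()
            stack = [(r, c)]
            seen.add((r, c))
            while stack:
                cr, cc = stack.pop()
                region_size += 1
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if not (0 <= nr < size and 0 <= nc < size):
                        continue
                    cell = board[nr][nc]
                    if cell == ".":
                        if (nr, nc) not in seen:
                            seen.add((nr, nc))
                            stack.append((nr, nc))
                    else:
                        borders.add(cell)
            # A region touching both colors (or neither) belongs to no one.
            if len(borders) == 1:
                territory[borders.pop()] += region_size
    return territory


final_board = [
    "..BW.",
    "..BW.",
    "..BW.",
    "..BW.",
    "..BW.",
]
score = count_territory(final_board)
print(score)  # {'B': 10, 'W': 5}
```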

I played a few more games, but I kept losing. It felt like my moves were being read and my opponent was always a few moves ahead of me.

I doubt the online game I played had any AI capabilities, but it still was able to predict my next move and read certain sequences. It was reading my patterns and had the ability to tell what my next move was likely going to be.

And that's probably the exact reason why Google's so obsessed with Go — it wants to build a system that's capable of predicting human behavior. So why not try to master the game that's supposed to be the most complicated game out there?

In fact, there were multiple moments when Sedol reacted in utter disbelief at AlphaGo's ability to read his next move, like here, where he literally drops his jaw and then rocks backwards in surprise.

And as Google gets better at reading and predicting human behavior, it will be able to apply its progress in AI to other areas. According to Brown University computer scientist Michael L. Littman, AlphaGo's technology could be applied to Google's self-driving cars, where the AI has to make decisions continuously, or in a problem-solving search capacity, like showing a gluten-free baking recipe.

Hassabis told The Verge that smartphone assistants that are smart and contextual could be the next area of focus for DeepMind, as well as healthcare and robotics. DeepMind's ability to sift through massive amounts of data and come up with the best possible next move could immensely help all of those industries.

Or it could test itself with another game. Google Senior Fellow Jeff Dean said the next target could be "Starcraft," the smash-hit PC game that involves a similar level of foresight and intelligence. Some people call Go an analogue version of Starcraft.

Either way, Google's ambition to predict human behavior is clearly much bigger than just outsmarting the best Go player in the world. "Ultimately we want to apply this to big real-world problems," Hassabis said.
