56 Cognitive Biases That Screw Up Everything We Do

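Affect heuristic

The way you feel shapes how you judge things. If you feel good about an activity, you tend to see its risks as low and its benefits as high.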

Anchoring bias

People are overreliant on the first piece of information they hear.

In a salary negotiation, for instance, whoever makes the first offer establishes a range of reasonable possibilities in each person's mind.

Any counteroffer will naturally be anchored by that opening offer.

Availability heuristic

When people overestimate the importance of information that is readily available to them.

For instance, a person might argue that smoking is not unhealthy on the basis that his grandfather lived to 100 and smoked three packs a day.

Bandwagon effect

The probability of one person adopting a belief increases based on the number of people who hold that belief. This is a powerful form of groupthink — and it's a reason meetings are often unproductive.

Blind-spot bias

Failing to recognize your cognitive biases is a bias in itself.

Notably, Princeton psychologist Emily Pronin has found that "individuals see the existence and operation of cognitive and motivational biases much more in others than in themselves."

Choice-supportive bias

When you choose something, you tend to feel positive about it, even if the choice has flaws. You think that your dog is awesome — even if it bites people every once in a while — and that other dogs are stupid, since they're not yours.

Clustering illusion

This is the tendency to see patterns in random events. It is central to various gambling fallacies, like the idea that red is more or less likely to turn up on a roulette table after a string of reds.
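Each spin of a roulette wheel is independent, so a streak of reds says nothing about the next spin. The short Python simulation below is a sketch of that point. It assumes a fair European wheel (18 red, 18 black, one green zero). The function name `red_after_streak`, the spin count and the seed are illustrative choices. The code compares how often red comes up overall with how often it comes up right after three reds in a row.

```python
import random

# Assumption: a fair European wheel with 18 red, 18 black and 1 green pocket.
RED_PROBABILITY = 18 / 37


def red_after_streak(spins: int = 200_000, streak: int = 3, seed: int = 1):
    """Return (overall share of reds, share of reds right after `streak` reds)."""
    rng = random.Random(seed)
    # True means the ball landed on red; each spin is drawn independently.
    results = [rng.random() < RED_PROBABILITY for _ in range(spins)]
    overall = sum(results) / spins
    # Keep only the spins that immediately follow a run of `streak` reds.
    after_streak = [
        results[i] for i in range(streak, spins) if all(results[i - streak:i])
    ]
    return overall, sum(after_streak) / len(after_streak)


overall, conditional = red_after_streak()
print(f"P(red) on any spin:   {overall:.3f}")
print(f"P(red) after 3 reds:  {conditional:.3f}")
```

Both numbers should land close to 18/37, about 0.49. Any gap between them is sampling noise, not a pattern.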

Confirmation bias

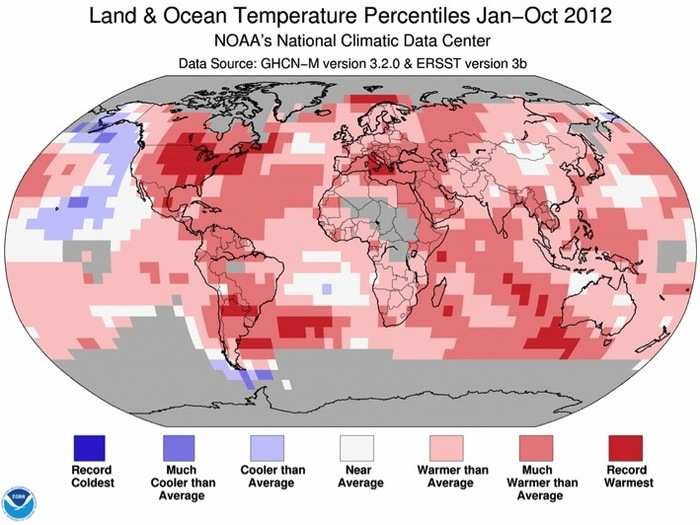

We tend to listen only to the information that confirms our preconceptions — one of the many reasons it's so hard to have an intelligent conversation about climate change.

Conformity

This is the tendency to go along with what other people are doing or saying. It is so powerful that it can lead people to do ridiculous things, as shown by experiments by legendary psychologist Solomon Asch.

In those experiments, about three-quarters of participants went along at least once with a group claiming that two lines of clearly different length were the same length, so long as everybody else in the room was saying so.

Conservatism bias

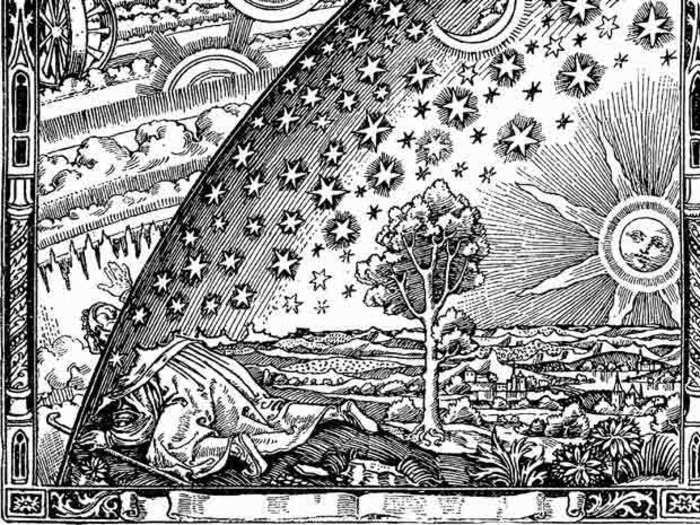

Where people favor prior evidence over new evidence or information that has emerged. People were slow to accept that the Earth was round because they held on to their earlier understanding that the planet was flat.

Curse of knowledge

When people who are well-informed find it hard to see things from the perspective of people who know less. For instance, in the TV show "The Big Bang Theory," it's difficult for scientist Sheldon Cooper to understand his waitress neighbor Penny.

Decoy effect

A phenomenon in marketing where consumers' preference between two options shifts after a third option is added.

Offer two sizes of soda and people may choose the smaller one; but offer a third even larger size, and people may choose what is now the medium option.

Denomination effect

People are less likely to spend large bills than their equivalent value in small bills or coins.

Duration neglect

When the duration of an event doesn't factor enough into the way we consider it.

For instance, we remember momentary pain just as strongly as long-term pain.

Empathy gap

Where people in one state of mind fail to understand people in another state of mind. If you are happy, you can't imagine why people would be unhappy. When you are not sexually aroused, you can't understand how you act when you are sexually aroused.

Frequency illusion

Where a word, name, or thing you just learned about suddenly appears everywhere. Now that you know what that SAT word means, you see it in so many places!

Fundamental attribution error

This is where you attribute a person's behavior to an intrinsic quality of her identity rather than the situation she's in.

For instance, you might think your colleague is a fundamentally angry person, when she is really just upset because she stubbed her toe.

Galatea effect

Where people succeed — or underperform — because they think they should.

Call it a self-fulfilling prophecy. For example, in schools it describes how students who expect to succeed tend to excel and students who expect to fail tend to do poorly.

Hard-easy bias

Where people are overconfident on hard problems and not confident enough on easy ones.

Halo effect

Where we take one positive attribute of someone and associate it with everything else about that person or thing.

Hindsight bias

The tendency to see past events as more predictable than they really were. Of course Apple and Google would become the two most important companies in phones. Tell that to Nokia, circa 2003.

Hyperbolic discounting

The tendency for people to want an immediate payoff rather than a larger gain later on.
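One common way to describe this is the hyperbolic discount function V = A / (1 + kD), where A is the reward, D is the delay and k is how steeply a person discounts. A hallmark of this curve is preference reversal. Someone may grab a smaller reward now over a larger one tomorrow, yet prefer to wait that same extra day when both rewards are a month away. The Python sketch below shows this under an assumed k of 0.2 per day. That value is arbitrary, chosen for illustration, not an empirical estimate. The dollar amounts and function names are hypothetical too.

```python
def hyperbolic_value(amount: float, delay_days: float, k: float = 0.2) -> float:
    """Present value under the hyperbolic model V = A / (1 + k * D).

    k = 0.2 per day is an illustrative assumption, not a measured value.
    """
    return amount / (1 + k * delay_days)


def prefers_later(small: float, small_delay: float,
                  large: float, large_delay: float, k: float = 0.2) -> bool:
    """True if the larger, later reward has the higher discounted value."""
    return hyperbolic_value(large, large_delay, k) > hyperbolic_value(small, small_delay, k)


# Choice 1: $100 today vs. $110 tomorrow.
print(prefers_later(100, 0, 110, 1))    # False: take the $100 now
# Choice 2: the same pair, pushed 30 days into the future.
print(prefers_later(100, 30, 110, 31))  # True: waiting the extra day now looks fine
```

Under exponential discounting, the ratio between the two values stays the same no matter how far out both rewards sit, so this flip would not happen. The reversal comes from the hyperbolic shape.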

Ideomotor effect

Where an idea causes you to have an unconscious physical reaction, like a sad thought that makes your eyes tear up. This is also how Ouija boards seem to have minds of their own.

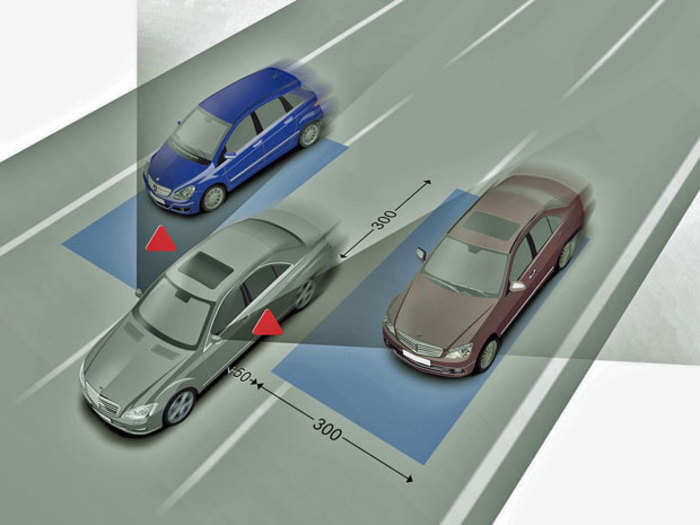

Illusion of control

The tendency for people to overestimate their ability to control events, like when a sports fan thinks his thoughts or actions had an effect on the game.

Information bias

The tendency to seek information when it does not affect action. More information is not always better. Indeed, with less information, people can often make more accurate predictions.

Negativity bias

The tendency to put more emphasis on negative experiences rather than positive ones. People with this bias feel that "bad is stronger than good" and will perceive threats more than opportunities in a given situation.

Psychologists argue it's an evolutionary adaptation — it's better to mistake a rock for a bear than a bear for a rock.

Observer-expectancy effect

A cousin of confirmation bias, this is when our expectations unconsciously influence how we perceive an outcome.

Researchers looking for a certain result in an experiment, for example, may inadvertently manipulate or interpret the results to reveal their expectations.

Omission bias

The tendency to prefer inaction to action, in ourselves and even in politics.

Psychologist Art Markman gave a great example in 2010:

In March, President Obama pushed Congress to enact sweeping health care reforms. Republicans hope that voters will blame Democrats for any problems that arise after the law is enacted.

But since there were problems with health care already, can they really expect that future outcomes will be blamed on Democrats, who passed new laws, rather than Republicans, who opposed them? Yes, they can — the omission bias is on their side.

Ostrich effect

The decision to ignore dangerous or negative information by "burying" one's head in the sand, like an ostrich.

Outcome bias

Judging a decision based on its outcome rather than on how the decision was made in the moment. Just because you won a lot in Vegas doesn't mean gambling your money was a smart decision.

Overconfidence

Some of us are too confident about our abilities, and this causes us to take greater risks in our daily lives.

Overoptimism

When we believe the world is a better place than it is, we aren't prepared for the danger and violence we may encounter. The inability to accept the full breadth of human nature leaves us vulnerable.

Pessimism bias

This is the opposite of the overoptimism bias. Pessimists overweight the negative consequences of their own and others' actions.

Placebo effect

Where believing that something is happening helps cause it to happen.

This is a basic principle of stock market cycles, as well as a supporting feature of medical treatment in general.

Post-purchase rationalization

Convincing ourselves after the fact that a purchase was worth the price.

Priming

Priming is when being exposed to an idea makes you more readily identify related ideas.

For instance, if you show somebody the word water, they'll be more likely to identify the words river, cup, or splash afterward.

Pro-innovation bias

When a proponent of an innovation tends to overvalue its usefulness and undervalue its limitations. Sound familiar, Silicon Valley?

Reactance

The desire to do the opposite of what someone wants you to do, in order to prove your freedom of choice.

Reciprocity

The belief that fairness should trump other values, even when it's not in our interests.

Regression bias

People take action in response to extreme situations. Then when the situations become less extreme, they take credit for causing the change, when a more likely explanation is that the situation was reverting to the mean.
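Regression to the mean happens even when nobody does anything. The Python sketch below assumes that each observed score is a stable underlying level plus independent random noise. Both are normally distributed, with arbitrary equal spreads. Everyone is measured twice with no intervention in between, and the code follows the 5% who scored highest the first time. On the second measurement, their average comes out noticeably lower, though still above the overall average of zero. That happens purely because part of their first, extreme score was luck. The function name and parameters are illustrative.

```python
import random
import statistics


def regression_demo(n: int = 10_000, seed: int = 42):
    """Simulate two noisy measurements of the same stable trait.

    Observed score = stable true level + independent random noise.
    Nothing changes between the first and second measurement.
    """
    rng = random.Random(seed)
    true_levels = [rng.gauss(0, 1) for _ in range(n)]
    first = [t + rng.gauss(0, 1) for t in true_levels]
    second = [t + rng.gauss(0, 1) for t in true_levels]

    # Select the 5% most extreme cases on the first measurement.
    cutoff = sorted(first)[int(0.95 * n)]
    picked = [i for i in range(n) if first[i] >= cutoff]

    before = statistics.mean(first[i] for i in picked)
    after = statistics.mean(second[i] for i in picked)
    return before, after


before, after = regression_demo()
print(f"extreme group, first measurement:  {before:.2f}")
print(f"same group, second measurement:    {after:.2f}")
```

If someone had stepped in after the first measurement, that drop would have looked like proof that their action worked.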

Seersucker illusion

Over-reliance on expert advice.

We call in "experts" to forecast when typically they have no greater chance of predicting an outcome than the rest of the population.

In other words, "for every seer there's a sucker."

Self-enhancing transmission bias

Everyone shares their successes more than their failures. This leads to a false perception of reality and inability to accurately assess situations.

It's also why people seem way happier on Instagram than anyone could be in real life.

Status quo bias

The tendency to prefer things to stay the same. This is similar to loss-aversion bias, where people prefer avoiding losses to acquiring equivalent gains.

Stereotyping

Expecting a group or person to have certain qualities without having real information about the individual.

While there may be some value to stereotyping, people tend to overuse it.

Survivorship bias

An error that comes from focusing only on surviving examples, causing us to misjudge a situation.

For instance, we might think that being an entrepreneur is easy because we haven't heard of all of the entrepreneurs who have failed.

Tragedy of the commons

We overuse common resources because it's not in any individual's interest to conserve them. This explains the overuse of natural resources.

Unit bias

We believe that there is an optimal unit size, or a universally acknowledged amount of a given item that is perceived as appropriate. This explains why we eat more when served larger portions.

Zero-risk bias

Sociologists have found that we love certainty, even if it's counterproductive.

Thus the zero-risk bias.

"Zero-risk bias occurs because individuals worry about risk, and eliminating it entirely means that there is no chance of harm being caused," says decision science blogger Steve Spaulding. "What is economically efficient and possibly more relevant, however, is not bringing risk from 1% to 0%, but from 50% to 5%."
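Spaulding's comparison is easy to check with back-of-the-envelope arithmetic. The Python sketch below assumes a hypothetical group of 1,000 people and counts the expected number of harmful outcomes avoided by each change. Risks are given in whole percentage points so the arithmetic stays exact.

```python
def expected_harms_avoided(population: int, risk_before_pct: int, risk_after_pct: int) -> float:
    """Expected number of harmful outcomes avoided when risk drops.

    Risks are in whole percentage points, e.g. 50 means 50%.
    """
    return population * (risk_before_pct - risk_after_pct) / 100


people = 1_000  # hypothetical group size
print(expected_harms_avoided(people, 1, 0))   # 1% -> 0%: prints 10.0
print(expected_harms_avoided(people, 50, 5))  # 50% -> 5%: prints 450.0
```

Cutting risk from 50% to 5% avoids 450 expected harms, versus 10 for taking it from 1% to 0%. That is why the larger but incomplete reduction is usually the better deal, even though eliminating risk entirely feels safer.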

