20 cognitive biases that screw up your decisions

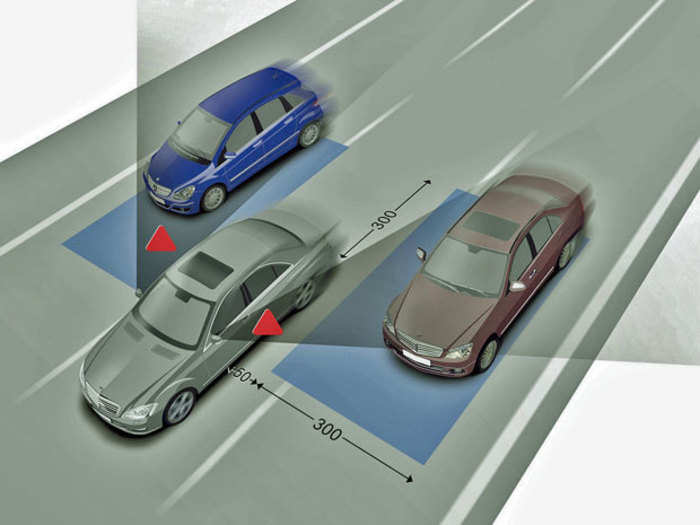

Anchoring bias

Over-relying on the first piece of information you encounter when making a decision.

Availability heuristic

When people overestimate the importance of the information that is most readily available to them.

For instance, a person might argue that smoking is not unhealthy on the basis that his grandfather lived to 100 and smoked three packs a day.

Bandwagon effect

The probability of one person adopting a belief increases based on the number of people who hold that belief. This is a powerful form of groupthink — and it's a reason meetings are often unproductive.

Blind-spot bias

Failing to recognize your cognitive biases is a bias in itself.

Notably, Princeton psychologist Emily Pronin has found that "individuals see the existence and operation of cognitive and motivational biases much more in others than in themselves."

Choice-supportive bias

When you choose something, you tend to feel positive about it, even if the choice has flaws. You think that your dog is awesome — even if it bites people every once in a while — and that other dogs are stupid, since they're not yours.

Clustering illusion

This is the tendency to see patterns in random events. It is central to various gambling fallacies, like the idea that red is more or less likely to turn up on a roulette table after a string of reds.
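
To see why a string of reds tells you nothing about the next spin, here is a minimal simulation sketch. It assumes an American-style wheel with 18 red pockets out of 38; the helper name `red_rate_after_streak` and the fixed seed are illustrative choices, not anything from the original article.

```python
import random


def red_rate_after_streak(spins, streak_len):
    """Share of spins that came up red right after `streak_len` reds in a row."""
    followups = 0  # spins that were preceded by a long-enough red streak
    reds = 0       # how many of those follow-up spins were red
    run = 0        # length of the current run of consecutive reds
    for is_red in spins:
        if run >= streak_len:
            followups += 1
            reds += is_red
        run = run + 1 if is_red else 0
    return reds / followups


# Assumption: American wheel, 18 red pockets out of 38.
P_RED = 18 / 38
rng = random.Random(42)  # fixed seed so the sketch is repeatable
spins = [rng.random() < P_RED for _ in range(200_000)]

overall = sum(spins) / len(spins)
after_streak = red_rate_after_streak(spins, streak_len=3)
print(f"red overall:               {overall:.3f}")
print(f"red after 3 reds in a row: {after_streak:.3f}")
```

Both rates should land close to 18/38 (about 0.474). Because each spin is independent, the streak carries no information about what comes next.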

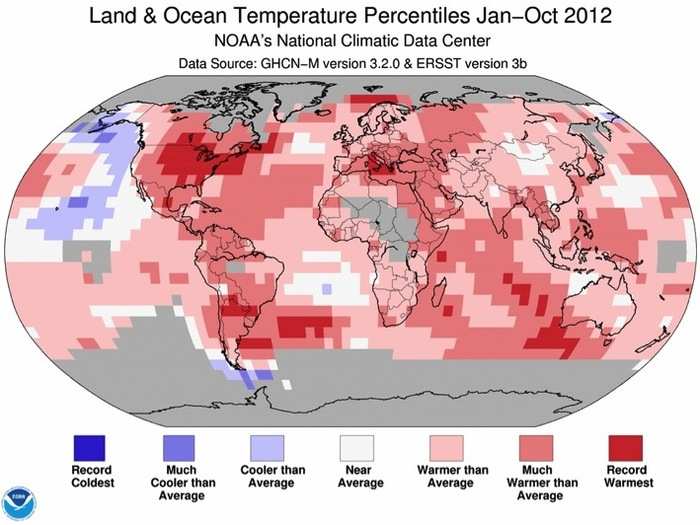

Confirmation bias

We tend to listen only to the information that confirms our preconceptions — one of the many reasons it's so hard to have an intelligent conversation about climate change.

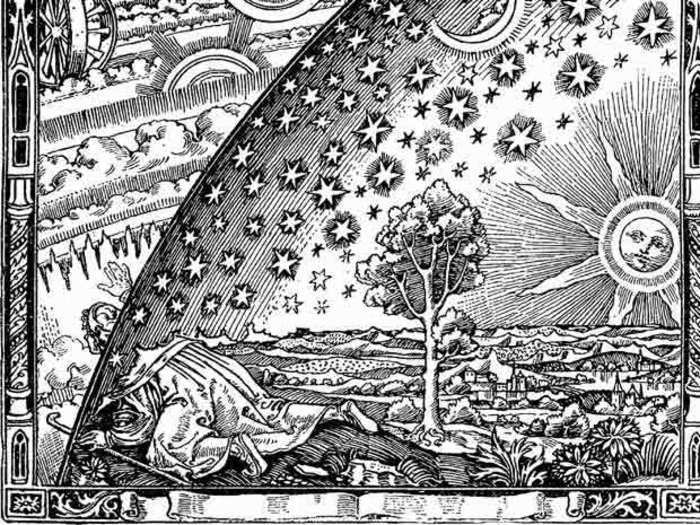

Conservatism bias

When people favor prior evidence over new evidence or information that has emerged. People were slow to accept that the Earth was round because they held on to their earlier understanding that the planet was flat.

Information bias

The tendency to seek information when it does not affect action. More information is not always better. Indeed, with less information, people can often make more accurate predictions.

Ostrich effect

The decision to ignore dangerous or negative information by "burying" one's head in the sand, like an ostrich. Research suggests that investors check the value of their holdings significantly less often during bad markets.

But there's an upside to acting like a big bird, at least for investors. When you have limited knowledge about your holdings, you're less likely to trade, which generally translates to higher returns in the long run.

Outcome bias

Judging a decision based on the outcome — rather than how exactly the decision was made in the moment. Just because you won a lot in Vegas doesn't mean gambling your money was a smart decision.
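
One way to judge the decision rather than the outcome is to look at the bet's expected value. The sketch below works it out for a hypothetical $1 even-money bet on red, again assuming an American wheel (18 red pockets out of 38); the function name `expected_profit` is illustrative.

```python
from fractions import Fraction


def expected_profit(p_win, payout, stake=1):
    """Average profit per bet: win `payout * stake` or lose `stake`."""
    return p_win * payout * stake - (1 - p_win) * stake


# Assumption: $1 on red, pays 1:1, 18 red pockets out of 38.
ev_red = expected_profit(Fraction(18, 38), payout=1)
print(ev_red)         # exact value as a fraction
print(float(ev_red))  # roughly -0.05 dollars per $1 bet
```

The expected profit is negative no matter how a particular night turns out, so a big win does not make the bet a good decision in hindsight.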

Overconfidence

Some of us are too confident about our abilities, and this causes us to take greater risks in our daily lives.

Perhaps surprisingly, experts are more prone to this bias than laypeople. An expert might make the same inaccurate prediction as someone unfamiliar with the topic — but the expert will probably be convinced that he's right.

Placebo effect

When simply believing that something will have a certain impact on you causes it to have that effect.

This is a basic principle of stock market cycles, as well as a supporting feature of medical treatment in general. People given "fake" pills often experience the same physiological effects as people given the real thing.

Pro-innovation bias

When a proponent of an innovation tends to overvalue its usefulness and undervalue its limitations. Sound familiar, Silicon Valley?

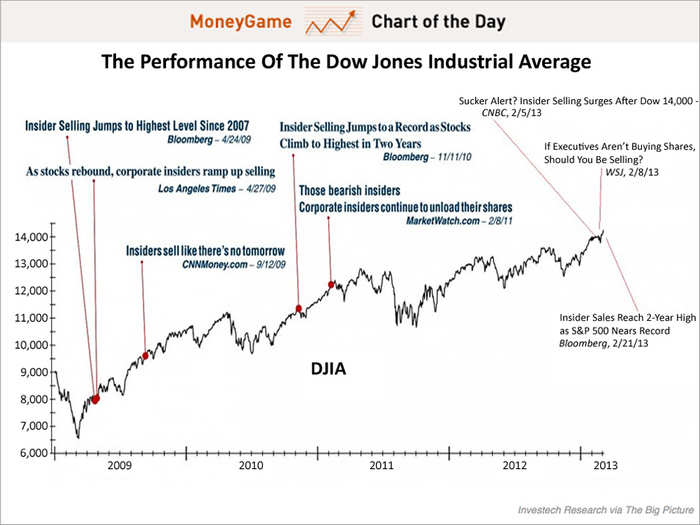

Recency

The tendency to weigh the latest information more heavily than older data.

As financial planner Carl Richards writes in The New York Times, investors often think the market will always look the way it looks today and therefore make unwise decisions: "When the market is down, we become convinced that it will never climb out so we cash out our portfolios and stick the money in a mattress."

Salience

Our tendency to focus on the most easily recognizable features of a person or concept.

When you think about dying, for example, you might worry about being mauled by a lion, even though dying in a car accident is statistically more likely, because the lion attacks you've heard about are more dramatic and stand out in your mind.

Selective perception

Allowing our expectations to influence how we perceive the world.

In a classic experiment on selective perception, researchers showed a video clip of a football game between Princeton and Dartmouth to students from both schools. Princeton students reported seeing Dartmouth players commit more infractions than Dartmouth students did. The researchers wrote: "The 'game' exists for a person and is experienced by him only in so far as certain happenings have significances in terms of his purpose."

Stereotyping

Expecting a group or person to have certain qualities without having real information about the individual.

There may be some value to stereotyping because it allows us to quickly identify strangers as friends or enemies. But people tend to overuse it — for example, thinking low-income individuals aren't as competent as higher-income people.

Survivorship bias

An error that comes from focusing only on surviving examples, causing us to misjudge a situation.

For instance, we might think that being an entrepreneur is easy because we haven't heard of all of the entrepreneurs who have failed.

Zero-risk bias

Sociologists have found that we love certainty, even if it's counterproductive.

Thus the zero-risk bias.

"Zero-risk bias occurs because individuals worry about risk, and eliminating it entirely means that there is no chance of harm being caused," says decision science blogger Steve Spaulding. "What is economically efficient and possibly more relevant, however, is not bringing risk from 1% to 0%, but from 50% to 5%."
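
The arithmetic behind that quote can be sketched as follows. The population of 1,000 people and the helper name `harms_avoided` are hypothetical, chosen only to turn the percentages into counts.

```python
from fractions import Fraction


def harms_avoided(risk_before, risk_after, population):
    """Expected number of people spared harm by lowering the risk."""
    return (risk_before - risk_after) * population


POPULATION = 1000  # hypothetical group size, for illustration only

# Eliminating a small risk entirely: 1% down to 0%.
small = harms_avoided(Fraction(1, 100), Fraction(0), POPULATION)
# Cutting a large risk without eliminating it: 50% down to 5%.
large = harms_avoided(Fraction(50, 100), Fraction(5, 100), POPULATION)

print(small, large)   # 10 450
print(large / small)  # 45
```

Under these assumptions, the reduction that leaves some risk on the table spares 45 times as many people as the one that reaches zero.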
