Welcome to deepfake hell: How realistic-looking fake videos left the uncanny valley and entered the mainstream

The term "deepfake" originated from a Reddit user who claims to have developed a machine learning algorithm that helped him transpose celebrity faces into porn videos.

Immediately, speculation and concern about the technology's potential wider uses began.

The videos sparked concern about potential future uses of the technology and its ethics.

Immediately at issue was the question of consent. More alarming was the potential for blackmail, and the application of the technology to those in power.

In 2017, months before pornographic deepfakes surfaced, a team of researchers at the University of Washington made headlines when they released a video of a computer-generated Barack Obama speaking words drawn from old audio or video clips.

At the time, the risks around the spread of misinformation were clear, but seemed far off given that it was academic researchers producing the videos.

The consumer-level creations added an alarming urgency to the risks at hand.

In January 2018, a deepfake creation desktop application called FakeApp launched, bringing deepfakes to the masses. A dedicated subreddit for deepfakes also gained popularity.

In January 2018, shortly after the pornographic deepfakes surfaced, FakeApp, a desktop application for deepfake creation, became available for download. The software was originally being peddled by a user called deepfakeapp on Reddit and used Google's TensorFlow framework — the same tool used by Reddit user deepfakes.

The readily available technology helped boost the dedicated deepfakes subreddit that sprang up following the original deepfakes Vice article.

Those who used the software, which was linked and explained in the subreddit, shared their own creations and commented on others'. Most of it was reportedly pornography, but other videos were more lighthearted, featuring random movie scenes with Nicolas Cage's face swapped in for the actor's.

Platforms began to explicitly ban deepfakes after Vice reported on revenge porn created with the technology.

In late January 2018, Vice ran a follow-up piece identifying deepfakes made with the faces of people the creators allegedly knew from high school or elsewhere, some of it possibly revenge porn.

The pornography was seemingly in a gray area of revenge porn laws, given that the videos weren't actual recordings of real people, but something closer to mashups.

The identified posts were found on Reddit and the chat app Discord.

Following the revelation, numerous platforms including Twitter, Discord, Gfycat, and Pornhub explicitly banned deepfakes and associated communities. Gfycat in particular announced that it was using AI detection methods in an attempt to proactively police deepfakes.

Reddit waited until February 2018 to ban the deepfakes subreddit and update its policies to broadly ban pornographic deepfakes.

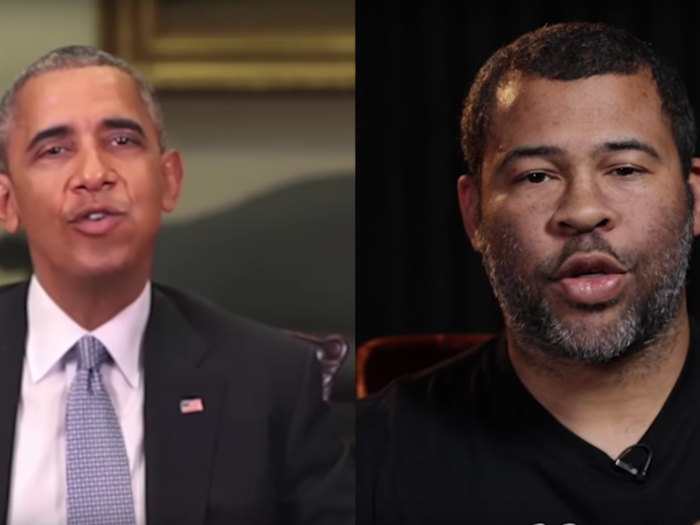

In April 2018, BuzzFeed took deepfakes to their logical conclusion by creating a video of Barack Obama saying words that weren't his own.

In April 2018, BuzzFeed published a frighteningly realistic video that went viral of a Barack Obama deepfake that it had commissioned. Unlike the University of Washington video, Obama was made to say words that weren't his own.

The video was made by a single person using FakeApp, which reportedly took 56 hours to scrape and aggregate a model of Obama. While it was transparent about being a deepfake, it was a warning shot for the dangerous potential of the technology.

In the last year, several manipulated videos of politicians and other high-profile individuals have gone viral, highlighting the continued dangers of deepfakes, and forcing large platforms to take a position on the technology.

Following BuzzFeed's disturbingly realistic Obama deepfake, instances of manipulated videos of high-profile subjects began to go viral, and seemingly fool millions of people.

Despite most of the videos being even more crude than deepfakes — using rudimentary film editing rather than AI — the videos sparked sustained concern about the power of deepfakes and other forms of video manipulation, while forcing technology companies to take a stance on what to do with such content.

In July 2018, over a million people watched an edited video of an Alexandria Ocasio-Cortez interview that made her appear as if she lacked answers to numerous questions.

In July 2018, an edited video of an interview with Alexandria Ocasio-Cortez went viral. It now has over 4 million views.

The video, which cuts the original interview and inserts a different host instead, makes it appear as if Ocasio-Cortez struggled to answer basic questions. The video blurred the line between satire and something that could be a sincere effort at smearing Ocasio-Cortez.

According to The Verge, commenters responded with statements like, "complete moron" and "dumb as a box of snakes," making it unclear how many people were actually fooled.

While not a deepfake, the video came on the early end of concerns over video misinformation.

In May 2019, a slowed-down video of Nancy Pelosi got millions of views and inspired online speculation that she was drunk. Facebook publicly refused to take it down.

In May 2019, a slowed-down video of Democratic Speaker of the House Nancy Pelosi went viral on Facebook and Twitter. The video was slowed down to make her appear as if she was slurring her speech, and inspired commenters to question Pelosi's mental state.

The video, while not a deepfake, was one of the most effective video manipulations targeting a top government official, attracting over 2 million views and clearly tricking many commenters.

The viral danger of video manipulation was on full display when Trump's personal lawyer Rudy Giuliani shared the video, ahead of Trump tweeting out another edited video of Pelosi.

Despite the fact that the video was fake, Facebook publicly refused to remove it, instead tossing the duty to its third-party fact-checkers, who can only produce information that appears alongside the video. In response, Pelosi jabbed at Facebook, saying "they wittingly were accomplices and enablers of false information to go across Facebook."

The video has since disappeared from Facebook, but Facebook maintains that it didn't delete it.

In June 2019, a deepfake of Mark Zuckerberg appeared on Instagram. Facebook also decided to leave it up, setting a precedent for leaving manipulated videos on its platforms.

Shortly after Pelosi's brush with fake virality, a deepfake of Mark Zuckerberg surfaced on Instagram, portraying a CBSN segment that never happened, where Zuckerberg appears to be saying, "Imagine this for a second: One man, with total control of billions of people's stolen data, all their secrets, their lives, their futures. I owe it all to Spectre. Spectre showed me that whoever controls the data, controls the future." Spectre was an art exhibition that featured several deepfakes made by artist Bill Posters and an advertising company. Posters says the video was a critique of big tech.

Despite a trademark claim from CBSN, Facebook refused to take the video down, telling Vice, "We will treat this content the same way we treat all misinformation on Instagram. If third-party fact-checkers mark it as false, we will filter it from Instagram's recommendation surfaces like Explore and hashtag pages."

Later, multiple Facebook fact-checkers flagged the video, which reduced the video's distribution. In response, the artist who made the video criticized the decision, saying, "How can we engage in serious exploration and debate about these incredibly important issues if we can't use art to critically interrogate the tech giants?"

In September 2018, lawmakers asked the Director of National Intelligence to report on the threat of deepfakes following the Pentagon's move to fund research into deepfake-detection technology.

In September 2018, Rep. Adam Schiff of California, Rep. Stephanie Murphy of Florida, and Rep. Carlos Curbelo of Florida asked the Director of National Intelligence to "report to Congress and the public about the implications of new technologies that allow malicious actors to fabricate audio, video, and still images."

Specifically, the representatives raised the possible threats of blackmail and disinformation, asking for a report by December 2018.

Previously, numerous Senators had mentioned deepfakes in hearings with Facebook and even confirmation hearings.

The Pentagon's Defense Advanced Research Projects Agency (DARPA) began funding research into technologies that could detect photo and video manipulation in 2016. Deepfakes seemingly became an area of focus in 2018.

In June 2019, the House finally had a hearing on deepfakes.

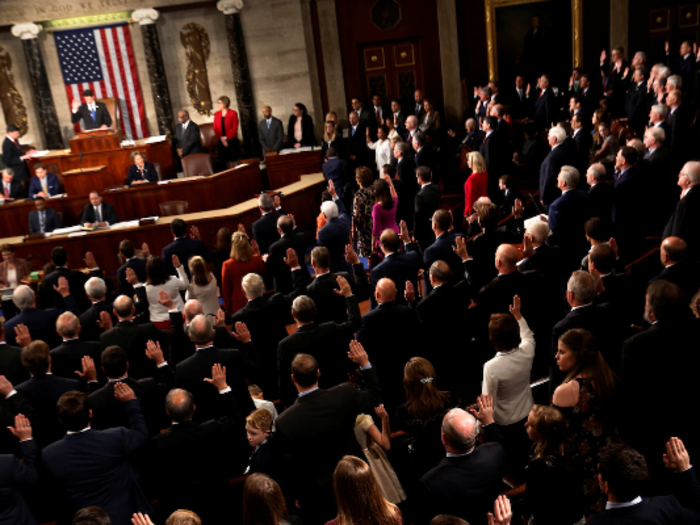

At a House Intelligence Committee hearing in June 2019, lawmakers finally heard official testimony around deepfakes, and committed to examining "the national security threats posed by AI-enabled fake content, what can be done to detect and combat it, and what role the public sector, the private sector, and society as a whole should play to counter a potentially grim, 'post-truth' future."

In the hearing, Rep. Schiff urged tech companies to "put in place policies to protect users from misinformation" before the 2020 elections.

Results have been mixed in trials of technology and policies developed to prevent deepfakes.

Platforms that have had to deal with deepfakes, along with the Pentagon, have been working on technology to detect and flag deepfakes, but results have been mixed.

In June 2018, a Vice investigation found that deepfakes were still being hosted on Gfycat despite its detection technology. Gfycat reportedly removed deepfakes that were flagged by Vice, but copies re-uploaded in an experiment by journalist Samantha Cole remained up.

Pornhub has also struggled with enforcement. At the time of this writing, the first result on a simple Google search of "deepfakes on pornhub" points to a playlist of 23 deepfake-style pornographic videos hosted on Pornhub that features the superimposed faces of Nicki Minaj, Scarlett Johansson, and Ann Coulter in explicit videos. Pornhub did not immediately respond to a request for comment regarding the videos in question.

Technologies that have been specifically developed to detect deepfakes are fallible, according to experts. Siwei Lyu, of the State University of New York at Albany, told MIT Tech Review that technology developed by his team (funded by DARPA) could recognize deepfakes by detecting the lack of blinking in deepfake videos, because faces with closed eyes often aren't included in training datasets. Lyu explained that the technology would most likely be rendered useless if pictures of blinking figures were eventually included when training AI.
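To make the blink cue concrete, here is a minimal Python sketch of the general idea. It is not Lyu's actual method. It assumes some upstream face tracker has already produced a per-frame "eye openness" score, and the function names, the 0.2 closed-eye threshold, and the two-blinks-per-minute cutoff are all hypothetical values chosen for illustration.

```python
from typing import Sequence


def count_blinks(openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count blinks as transitions from open eyes to closed eyes.

    `openness` is a hypothetical per-frame score (higher means more open).
    `closed_below` is an illustrative threshold, not a published value.
    """
    blinks = 0
    closed = False
    for value in openness:
        if value < closed_below and not closed:
            blinks += 1      # eyes just closed: start of a new blink
            closed = True
        elif value >= closed_below:
            closed = False   # eyes reopened: ready to count the next blink
    return blinks


def looks_suspicious(
    openness: Sequence[float],
    fps: float,
    min_blinks_per_minute: float = 2.0,
    closed_below: float = 0.2,
) -> bool:
    """Flag a clip whose blink rate falls below an assumed minimum."""
    if fps <= 0 or len(openness) == 0:
        raise ValueError("need a positive frame rate and at least one frame")
    minutes = len(openness) / fps / 60.0
    rate = count_blinks(openness, closed_below) / minutes
    return rate < min_blinks_per_minute


# A 10-second clip at 30 fps in which the eyes never close.
no_blink_clip = [0.3] * 300
# A clip of the same length containing two short blinks.
blinking_clip = [0.3] * 100 + [0.1] * 3 + [0.3] * 100 + [0.1] * 3 + [0.3] * 94

print(looks_suspicious(no_blink_clip, fps=30))
print(looks_suspicious(blinking_clip, fps=30))
```

The sketch also shows why the cue is fragile: a forger only needs to produce output that crosses the threshold a few times per minute, which is the weakness Lyu describes.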

Other teams working off of DARPA's initiative are using similar cues, such as head movements, to attempt to detect deepfakes, but each time the inner workings of detection technology are revealed, forgers gain another foothold toward avoiding detection.

Deepfakes vary in quality and intention, which makes the future hard to predict.

Despite the serious nature of recent video manipulations and professional deepfakes, the practice is growing.

A recent deepfake, which was clearly identifiable as a manipulation, of Jon Snow apologizing for the final season of "Game of Thrones," illustrated the expanding coverage and mixed use of deepfakes.

The technology, while posing a threat on multiple fronts, can also be legitimately used for satire, comedy, art, and critique.

The conundrum is sure to develop as platforms continue to grapple with issues of consent, free expression, and preventing the spread of misinformation.
