61 cognitive biases that screw up everything we do

Shana Lebowitz, Allana Akhtar, Marguerite Ward

- Hundreds of biases cause humans to behave irrationally.

- For instance, the denomination effect is when people are less likely to spend large bills than their equivalent value in small bills or coins.

- Overconfidence is when some of us are too confident about our abilities, and this causes us to take greater risks in our daily lives.

We like to think we're rational human beings — but in reality, we are prone to hundreds of proven biases that cause us to think and act irrationally.

Even thinking we're rational despite evidence of irrationality in others is known as blind-spot bias.

The study of how often human beings do irrational things was enough for psychologist Daniel Kahneman to win the Nobel Prize in Economics, and it opened the rapidly expanding field of behavioral economics. Similar insights are also reshaping everything from marketing to criminology.

Hoping to clue you — and ourselves — into the biases that frame our decisions, we've collected a long list of the most notable ones.

This is an update of an article that was previously published with additional contributions by Drake Baer and Gus Lubin.

Read the original article on Business Insider.

The rhyme-as-reason effect occurs when your brain finds rhyming statements more accurate.

Proverbs or statements that rhyme sound more truthful to humans, psychologists find.

The most infamous use of the rhyme-as-reason effect occurred during the O.J. Simpson trial, when the NFL legend was tried for allegedly murdering his ex-wife Nicole Brown Simpson.

After Simpson failed to fit into the gloves stained with blood, his lawyer, Johnnie Cochran, said: "If it doesn't fit, you must acquit." The jury later found Simpson not guilty.

The "well-traveled road" effect is why you underestimate the time it takes to get to work on your daily commute, but arrive at the airport hours ahead for a trip you've never taken before.

"Well-traveled roads," or routes you travel regularly, feel shorter than a journey you've never taken before, psychologists say.

As a result, humans tend to underestimate the time it will take to get somewhere on a commute they take regularly, but they will overestimate the time it takes to travel on an unfamiliar route.

This is related to the feeling that "time flies" when you engage in automatic, routine tasks, according to psychologist and author Jeremy Dean. "Familiarity, then, with routes traveled, holidays and work activities, tends to speed up our perception of time," Dean wrote on PsyBlog.

The fallacy of sunk costs describes the mistake of thinking that just because we've worked on something for a long time, we should keep working on it, even if the costs of continuing outweigh the benefits.

Someone blinded by the fallacy of sunk costs focuses on the time, money, or effort that's already been lost by doing something and uses it as a reason to keep going. They fail to see the future costs of sticking with what they're doing.

For example, say you've spent 10 hours trying to fix your computer, but haven't been able to get it to work. Instead of giving up and calling a professional, or looking into buying a new computer, you keep working on it. After all, you've already spent so much time, you might as well keep going, right?

Wrong. Instead of cutting your losses, you keep obsessing over it. You miss your son's baseball practice and your family dinner. The computer is still not fixed. Now, not only have you lost the original 10 hours you spent, you've lost even more time, as well as the opportunity to be with your family.

Zero-risk bias occurs when we choose to eliminate risk absolutely in one area, rather than eliminate more risk spread out across different areas.

Sociologists have found that we love certainty — even if it's counterproductive.

Thus the zero-risk bias.

In general, people tend to prefer approaches that eliminate some risks completely, as opposed to approaches that reduce all risks — even though the second option would produce a greater overall decrease in risk.

Unit bias occurs when people think a particular size is the optimal amount.

We believe that there is an optimal unit size, or a universally acknowledged amount of a given item that is perceived as appropriate. This explains why when served larger portions, we eat more.

Tragedy of the commons occurs when individuals use public resources in their own self-interest rather than for the common good.

We overuse common resources because it's not in any individual's interest to conserve them. This explains the overuse of natural resources, opportunism, and any acts of self-interest over collective interest.

Survivorship bias occurs when individuals focus on successful outcomes, yet overlook failure.

Survivorship bias is an error that comes from focusing only on surviving examples, causing us to misjudge a situation. For instance, we might think that being an entrepreneur is easy because we haven't heard of all of the entrepreneurs who have failed.

It can also cause us to assume that survivors are inordinately better than failures, without regard for the importance of luck or other factors.

Stereotyping occurs when people generalize characteristics about others based on the groups they belong to.

Stereotyping occurs when we expect a group or person to have certain qualities without having real information about the individual.

There may be some value to stereotyping because it allows us to quickly identify strangers as friends or enemies. But people tend to overuse it.

For example, one study found that people were more likely to hire a hypothetical male candidate over a female candidate to perform a mathematical task, even when they learned that the candidates would perform equally well.

Status quo bias is the tendency to prefer things to stay the same.

This is similar to loss-aversion bias, where people prefer to avoid losses instead of acquiring gains.

Self-enhancing transmission bias occurs when everyone shares their successes more than their failures.

Self-enhancing transmission bias leads to a false perception of reality and inability to accurately assess situations.

Selective attention occurs when we allow our expectations to influence how we perceive the world.

The classic study on selective attention is called the "invisible gorilla" experiment. Psychologists Christopher Chabris and Daniel Simons created a short film in which a team wearing white and a team wearing black pass basketballs. Participants are asked to count the number of passes made by either the white or the black team. Halfway through the video, a woman wearing a gorilla suit crosses the court, thumps her chest, and walks off screen. She's on screen for a total of nine seconds.

About half of the thousands of people who have watched the video don't notice the gorilla, presumably because they're so wrapped up in counting the basketball passes.

Of course, when asked if they would notice the gorilla in this situation, nearly everyone says they would.

Seersucker illusion is the over-reliance on expert advice.

Seersucker illusion has to do with the avoidance of responsibility. We call in "experts" to forecast when typically they have no greater chance of predicting an outcome than the rest of the population. In other words, "for every seer there's a sucker."

Scope insensitivity is where your willingness to pay for something doesn't correlate with the scale of the outcome.

From Less Wrong:

Once upon a time, three groups of subjects were asked how much they would pay to save 2,000 / 20,000 / 200,000 migrating birds from drowning in uncovered oil ponds. The groups respectively answered $80, $78, and $88. This is scope insensitivity or scope neglect: the number of birds saved — the scope of the altruistic action — had little effect on willingness to pay.
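
One way to see how little the answers track the scope is to work out the implied price per bird. The short Python sketch below uses only the figures quoted above; the variable names are illustrative.

```python
# Figures from the quoted study: birds saved -> stated willingness to pay (USD).
willingness_to_pay = {2_000: 80, 20_000: 78, 200_000: 88}

# Implied dollars per bird for each group.
per_bird = {birds: dollars / birds for birds, dollars in willingness_to_pay.items()}

for birds, price in per_bird.items():
    print(f"{birds:>7,} birds: ${price:.5f} per bird")
```

The total barely moves, so the implied value of a single bird drops from 4 cents in the smallest group to well under a tenth of a cent in the largest, a roughly 90-fold fall.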

Salience is our tendency to focus on the most easily recognizable features of a person or concept.

For example, research suggests that when there's only one member of a racial minority on a business team, other members use that individual's performance to predict how any member of that racial group would perform.

Restraint bias occurs when we overestimate our capacity for impulse control.

With restraint bias, one overestimates one's ability to show restraint in the face of temptation.

Regression bias occurs when people take action in response to extreme situations. When the situations become less extreme, they take credit for causing the change, when a more likely explanation is that the situation was reverting to the mean.

In "Thinking, Fast and Slow," Kahneman gives an example of how the regression bias plays out in real life. An instructor in the Israeli Air Force asserted that when he chided cadets for bad execution, they always did better on their second try. The instructor believed that his reprimands were the cause of the improvement.

Yet Kahneman told him he was really observing regression to the mean, or random variations in the quality of performance. If you perform really badly one time, it's highly probable that you'll do better the next time, even if you do nothing to try to improve.
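
A quick simulation can make the point concrete. The Python sketch below is not Kahneman's analysis; it assumes, purely for illustration, that every attempt is random noise around the same average, so nobody's skill changes and nobody is reprimanded. The function name, group size, and seed are made up for the example.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

def simulate(n_cadets=100_000, worst_fraction=0.10):
    # Assumption: both attempts are independent draws from the same
    # distribution, so no cadet's underlying skill changes.
    first = [random.gauss(0, 1) for _ in range(n_cadets)]
    second = [random.gauss(0, 1) for _ in range(n_cadets)]

    # Select the cadets whose first attempt fell in the worst 10%.
    cutoff = sorted(first)[int(n_cadets * worst_fraction)]
    worst = [i for i in range(n_cadets) if first[i] < cutoff]

    avg_first = sum(first[i] for i in worst) / len(worst)
    avg_second = sum(second[i] for i in worst) / len(worst)
    share_improved = sum(second[i] > first[i] for i in worst) / len(worst)
    return avg_first, avg_second, share_improved

avg_first, avg_second, share_improved = simulate()
print(f"worst group, first attempt:  {avg_first:+.2f}")
print(f"worst group, second attempt: {avg_second:+.2f}")
print(f"share who improved:          {share_improved:.0%}")
```

Under these assumptions the worst performers score close to average on their second try, and the large majority of them improve, even though nothing was done between attempts.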

Reciprocity is the belief that fairness should trump other values, even when it's not in our economic or other interests.

We learn the reciprocity norm from a young age, and it affects all kinds of interactions. One study found that, when restaurant waiters gave customers extra mints, the customers upped their tips. That's likely because the customers felt obligated to return the favor.

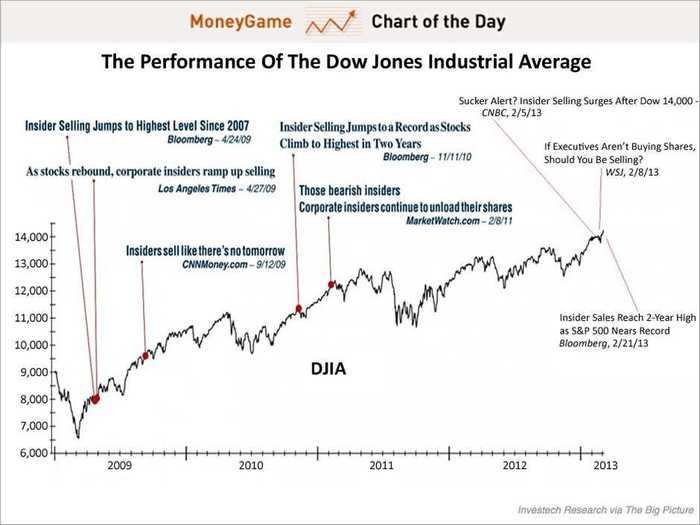

Recency is the tendency to weigh the latest information more heavily than older data.

As financial planner Carl Richards writes in The New York Times, investors often think the market will always look the way it looks today and therefore make unwise decisions: "When the market is down we become convinced that it will never climb out, so we cash out our portfolios and stick the money in a mattress."

Reactance refers to the desire to do the opposite of what someone wants you to do, in order to prove your freedom of choice.

One study found that when people saw a sign that read, "Do not write on these walls under any circumstances," they were more likely to deface the walls than when they saw a sign that read, "Please don't write on these walls." The study authors say that's partly because the first sign posed a greater perceived threat to people's freedom.

Procrastination occurs when you decide to act in favor of the present moment over investing in the future.

For example, even if your goal is to lose weight, you might still go for a thick slice of cake today and say you'll start your diet tomorrow.

That happens largely because, when you set the weight-loss goal, you don't take into account that there will be many instances when you're confronted with cake and you don't have a plan for managing your future impulses.

Pro-innovation bias occurs when a proponent of an innovation tends to overvalue its usefulness and undervalue its limitations.

Sound familiar, Silicon Valley?

Priming is when you more readily identify ideas related to a previously introduced idea.

Let's take an experiment as an example, again from Less Wrong:

Suppose you ask subjects to press one button if a string of letters forms a word, and another button if the string does not form a word. (E.g., "banack" vs. "banner".) Then you show them the string "water." Later, they will more quickly identify the string "drink" as a word. This is known as "cognitive priming" ...

Priming also reveals the massive parallelism of spreading activation: if seeing "water" activates the word "drink," it probably also activates "river," or "cup," or "splash."

Post-purchase rationalization is when we overlook an expensive item's flaws to justify the purchase.

Post-purchase rationalization is when we make ourselves believe that a purchase was worth the value after the fact.

Planning fallacy is the tendency to underestimate how much time it will take to complete a task.

According to Kahneman, people generally think they're more capable than they actually are and have greater power to influence the future than they really do. For example, even if you know that writing a project report typically takes your coworkers several hours, you might believe that you can finish it in under an hour because you're especially skilled.

Placebo effect is when simply believing that something will have a certain impact on you causes it to have that effect.

This is a basic principle of stock market cycles, as well as a supporting feature of medical treatment in general. People given "fake" pills often experience the same physiological effects as people given the real thing.

Pessimism bias occurs when individuals overestimate how often negative things will happen to them.

This is the opposite of the overoptimism bias. Pessimists overweigh the negative consequences of their own and others' actions.

Those who are depressed are more likely to exhibit the pessimism bias.

Overoptimism occurs when individuals believe they are less likely to encounter negative events.

When we believe the world is a better place than it is, we aren't prepared for the danger and violence we may encounter. The inability to accept the full breadth of human nature leaves us vulnerable.

On the flip side, overoptimism may have some benefits — hopefulness tends to improve physical health and reduce stress. In fact, researchers say we're basically hardwired to underestimate the probability of negative events — meaning this bias is especially hard to overcome.

Overconfidence is when some of us are too confident about our abilities, and this causes us to take greater risks in our daily lives.

Perhaps surprisingly, experts are more prone to this bias than laypeople. An expert might make the same inaccurate prediction as someone unfamiliar with the topic — but the expert will probably be convinced that he's right.

Outcome bias refers to judging a decision based on the outcome, rather than how exactly the decision was made in the moment.

Just because you won a lot in Vegas doesn't mean gambling your money was a smart decision.

Research illustrates the power of the outcome bias on the way we evaluate decisions.

In one study, students were asked whether a particular city should have paid for a full-time bridge monitor to protect against debris getting caught and blocking the flow of water. Some students only saw the information that was available at the time of the city's decision; others saw the information that was available after the decision was already made: debris had blocked the river and caused flood damage.

As it turns out, 24% of students in the first group (with limited information) said the city should have paid for the bridge monitor, compared to 56% of students in the second group (with all information). Hindsight had affected their judgment.

The ostrich effect is the decision to ignore dangerous or negative information by "burying" one's head in the sand, like an ostrich.

Research suggests that investors check the value of their holdings significantly less often during bad markets.

But there's an upside to acting like a big bird, at least for investors. When you have limited knowledge about your holdings, you're less likely to trade, which generally translates to higher returns in the long run.

Omission bias is the tendency to prefer inaction to action, in ourselves and even in politics.

Psychologist Art Markman gave a great example back in 2010:

The omission bias creeps into our judgment calls on domestic arguments, work mishaps, and even national policy discussions. In March, President Obama pushed Congress to enact sweeping healthcare reforms. Republicans hope that voters will blame Democrats for any problems that arise after the law is enacted. But since there were problems with healthcare already, can they really expect that future outcomes will be blamed on Democrats, who passed new laws, rather than Republicans, who opposed them? Yes, they can — the omission bias is on their side.

The observer-expectancy effect is when a researcher's expectations impact the outcome of an experiment.

A cousin of confirmation bias, here our expectations unconsciously influence how we perceive an outcome. Researchers looking for a certain result in an experiment, for example, may inadvertently manipulate or interpret the results to reveal their expectations.

That's why the "double-blind" experimental design was created for the field of scientific research.

Negativity bias is the tendency to put more emphasis on negative experiences rather than positive ones.

People with this bias feel that "bad is stronger than good" and will perceive threats more than opportunities in a given situation.

Psychologists argue it's an evolutionary adaptation: it's better to mistake a rock for a bear than a bear for a rock.

In modern times, the negativity bias has meaningful implications for our relationships. John Gottman, a relationship expert, found that a stable relationship requires that good experiences occur at least five times more often than bad experiences.

Irrational escalation is when people make irrational decisions based on past rational decisions.

It may happen in an auction, when a bidding war spurs two bidders to offer more than they would otherwise be willing to pay.

Inter-group bias is when we view people in our group differently from how we see someone in another group.

This bias helps illuminate the origins of prejudice and discrimination.

Unfortunately, researchers say we aren't always aware of our preference for people in our social group.

Information bias is the tendency to seek information when it does not affect action.

More information is not always better. Indeed, with less information, people can often make more accurate predictions.

In one study, people who knew the names of basketball teams as well as their performance records made less accurate predictions about the outcome of NBA games than people who only knew the teams' performance records. However, most people believed that knowing the team names was helpful in making their predictions.

Illusion of control is when people overestimate how much control they have over certain situations.

Illusion of control is the tendency for people to overestimate their ability to control events, like when a sports fan thinks his thoughts or actions had an effect on the game.

Ideomotor effect occurs when the body reacts to ideas alone.

This is where an idea causes you to have an unconscious physical reaction, like a sad thought that makes your eyes tear up. This is also how Ouija boards seem to have minds of their own.

Hyperbolic discounting happens when people make decisions for a smaller reward sooner, rather than a greater reward later.

Hyperbolic discounting is the tendency for people to want an immediate payoff rather than a larger gain later on.
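
The name comes from a common way of modeling this preference, in which a reward's perceived value is the amount divided by (1 + k × delay). The Python sketch below illustrates that formula; the dollar amounts, delays, and the discount rate `k` are hypothetical values chosen for the example, not figures from any study.

```python
def discounted_value(amount, delay_days, k=0.2):
    """Hyperbolic discounting: perceived value = amount / (1 + k * delay).

    k is an assumed per-day discount rate, picked only for illustration.
    """
    return amount / (1 + k * delay_days)

def preferred(option_a, option_b):
    """Each option is an (amount, delay_days) pair; return the one valued higher."""
    return max(option_a, option_b, key=lambda option: discounted_value(*option))

# $100 today versus $110 tomorrow.
near_choice = preferred((100, 0), (110, 1))
# The same two rewards, both pushed 30 days into the future.
far_choice = preferred((100, 30), (110, 31))

print("choosing today:", near_choice)
print("choosing a month ahead:", far_choice)
```

With these assumed numbers, the smaller reward wins when it is available immediately, but the larger one wins when both are a month away. The one-day gap is identical in both cases; only its distance from the present changes.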

Hindsight bias is when people claim to have predicted an outcome that was impossible to predict at the time.

Of course Apple and Google would become the two most important companies in phones — but tell that to Nokia, circa 2003.

One classic experiment on hindsight bias took place in the 1970s, when President Richard Nixon was about to depart for trips to China and the Soviet Union. Researchers asked the participants to predict various outcomes. After the trips, researchers asked participants to recall the probabilities that they had initially assigned to each outcome.

Results showed that participants remembered having rated the events unlikely if the event had not occurred, and remembered having rated the events likely if the event had occurred.

Herding occurs when individuals mirror the sometimes irrational actions of a group.

People tend to flock together, especially in difficult or uncertain times.

Hard-easy bias occurs when individuals underestimate their ability to perform easy tasks, yet overestimate their ability to perform more difficult ones.

Hard-easy bias occurs when people are overconfident on hard problems and not confident enough on easy problems.

Halo effect is when we take one positive attribute of someone and associate it with everything else about that person or thing.

It helps explain why we often assume highly attractive individuals are also good people, why they tend to get hired more easily, and why they earn more money.

Galatea effect occurs when people succeed — or underperform — because they think they should.

Call it a self-fulfilling prophecy. For example, in schools it describes how students who are expected to succeed tend to excel and students who are expected to fail tend to do poorly.

Fundamental attribution error is where you attribute a person's behavior to an intrinsic quality of her identity rather than the situation she's in.

For instance, you might think your colleague is an angry person, when she is really just upset because she stubbed her toe.

Frequency illusion occurs when a word, name or thing you just learned about suddenly appears everywhere.

Now that you know what that SAT word means, you see it in so many places!

Empathy gap occurs when people in one state of mind fail to understand people in another state of mind.

If you are happy, you can't imagine why people would be unhappy. When you are not sexually aroused, you can't understand how you act when you are sexually aroused.

Duration neglect occurs when the duration of an event doesn't factor enough into the way we consider it.

For instance, we remember momentary pain just as strongly as long-term pain.

Kahneman and colleagues tracked patients' pain during colonoscopies (they used to be more uncomfortable) and found that the end of the procedure pretty much determined patients' evaluations of the entire experience. One set of patients underwent a shorter procedure in which the end was relatively painful. The other set of patients underwent a longer procedure in which the end was less painful.

Results showed that the second set of patients (the longer colonoscopy) rated the procedure as less painful overall.

Denomination effect is when people are less likely to spend large bills than their equivalent value in small bills or coins.

The phenomenon is typically seen with currency.

Decoy effect is a phenomenon in marketing where consumers have a specific change in preference between two choices after being presented with a third choice.

In his TED Talk, behavioral economist Dan Ariely explains the "decoy effect" using an old Economist advertisement as an example.

The ad featured three subscription levels: $59 for online only, $125 for print only, and $125 for online and print. Ariely figured out that the option to pay $125 for print only exists so that the option to pay $125 for online and print looks more enticing than it would if it were paired only with the $59 option.

Curse of knowledge means that when people know something, it's hard to imagine not knowing it.

People who are better informed cannot understand the common man. For instance, in the TV show "The Big Bang Theory," it's difficult for scientist Sheldon Cooper to understand his waitress neighbor Penny.

Conservatism bias occurs when people believe prior evidence more than new evidence.

Conservatism bias is where people believe prior evidence more than new evidence or information that has emerged. People were slow to accept the fact that the Earth was round because they maintained their earlier understanding that the planet was flat.

Conformity describes how people tend to behave similarly to other people.

This is the tendency of people to conform with other people. It is so powerful that it may lead people to do ridiculous things, as shown by the following experiment by Solomon Asch.

Ask one subject and several fake subjects (who are really working with the experimenter) which of lines B, C, D, and E is the same length as A. If all of the fake subjects say that D is the same length as A, the real subject will agree with this objectively false answer a shocking three-quarters of the time.

"That we have found the tendency to conformity in our society so strong that reasonably intelligent and well-meaning young people are willing to call white black is a matter of concern," Asch wrote. "It raises questions about our ways of education and about the values that guide our conduct."

Confirmation bias describes the tendency to only listen to information that confirms our preconceptions.

We tend to listen only to the information that confirms our preconceptions. Once you've formed an initial opinion about someone, it's hard to change your mind.

For example, researchers had participants watch a video of a student taking an academic test. Some participants were told that the student came from a high socioeconomic background; others were told the student came from a low socioeconomic background. Those in the first condition believed the student's performance was above grade level, while those in the second condition believed the student's performance was below.

If you know some information about a job candidate's background, you might be inclined to use that information to make false judgments about his or her ability.

The clustering illusion happens when we see trends in random events that happen close together.

This is the tendency to see patterns in random events. It is central to various gambling fallacies, like the idea that red is more or less likely to turn up on a roulette table after a string of reds.
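
A simulation shows why those fallacies fail. The Python sketch below assumes an American-style wheel with 18 red pockets out of 38 and uses a pseudo-random generator as a stand-in for a fair wheel; the streak length of three and the number of spins are arbitrary choices for the example.

```python
import random

random.seed(1)  # fixed seed so the run is repeatable

P_RED = 18 / 38  # assumed American wheel: 18 red pockets out of 38

# True means the spin landed on red.
spins = [random.random() < P_RED for _ in range(1_000_000)]

# Outcomes of every spin that immediately follows three reds in a row.
after_streak = [
    spins[i]
    for i in range(3, len(spins))
    if spins[i - 3] and spins[i - 2] and spins[i - 1]
]

overall_rate = sum(spins) / len(spins)
streak_rate = sum(after_streak) / len(after_streak)

print(f"red on any spin:        {overall_rate:.3f}")
print(f"red after three reds:   {streak_rate:.3f}")
```

The two rates come out nearly identical, because each spin is independent of the ones before it. Streaks still appear often in the simulated data, which is exactly what makes them feel meaningful.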

Choice-supportive bias describes the tendency to have positive attitudes about the things or ideas we choose, even when they are flawed.

When you choose something, you tend to feel positive about it, even if the choice has flaws. You think that your dog is awesome — even if it bites people every once in a while — and that other dogs are stupid, since they're not yours.

The bias blind spot describes how individuals can see bias in others, but struggle to see their own biases.

Failing to recognize your cognitive biases is a bias in itself.

Notably, Princeton psychologist Emily Pronin has found that "individuals see the existence and operation of cognitive and motivational biases much more in others than in themselves."

The bandwagon effect describes when people do something simply because others are also doing it.

The probability of one person adopting a belief increases based on the number of people who hold that belief. This is a powerful form of groupthink — and it's a reason meetings are often so unproductive.

Availability heuristic describes a shortcut where people make decisions based on information that's easier to remember.

In one experiment, a professor asked students to list either two or 10 ways to improve his class. Students who had to come up with 10 ways gave the class much higher ratings, likely because they had a harder time thinking about what was wrong with the class.

This phenomenon could easily apply in the case of job interviews. If you have a hard time recalling what a candidate did wrong during an interview, you'll likely rate him higher than if you can recall those things easily.

Anchoring bias means people rely too heavily on the first piece of information they hear when making decisions.

People are over-reliant on the first piece of information they hear.

In a salary negotiation, for instance, whoever makes the first offer establishes a range of reasonable possibilities in each person's mind. Any counteroffer will naturally react to or be anchored by that opening offer.

"Most people come with the very strong belief they should never make an opening offer," said Leigh Thompson, a professor at Northwestern University's Kellogg School of Management. "Our research and lots of corroborating research shows that's completely backwards. The guy or gal who makes a first offer is better off."

The affect heuristic describes how humans sometimes make decisions based on emotion.

The psychologist Paul Slovic coined this term to describe the way people let their emotions color their beliefs about the world. For example, your political affiliation often determines which arguments you find persuasive.

Our emotions also affect the way we perceive the risks and benefits of different activities. For example, people tend to dread developing cancer, so they see activities related to cancer as much more dangerous than those linked to less dreaded forms of death, illness, and injury, such as accidents.
